12. Finding signal and defining background | Background rate computation Analysis

With the CAST data fully reconstructed and energy calibrated, it is time to define the methods used to extract axion candidates and derive a background rate from the data. We first introduce the likelihood cut method in sec. 12.1 to motivate the need for reference X-ray data from an X-ray tube. Such data, taken at the CAST Detector Lab (CDL) will be discussed in depth in sec. 12.2. We then see how the reference data is used in the likelihood method to act as an event classifier in sec. 12.3. As an alternative to the likelihood cut, we will introduce another classifier in the form of a simple artificial neural network in sec. 12.4. This is another in depth discussion, as the selection of the training data and verification is non-trivial. With both classifiers discussed, it is time to include all other Septemboard detector features as additional vetoes in sec. 12.5. At the very end we will look at background rates for different cases, sec. 12.6, motivating the approach of our limit calculation in the next chapter.

Note: The methods discussed in this chapter are generally classifiers that predict how 'signal-like' a cluster is. Based on this prediction we will usually define a cut value as to keep a cluster as a potential signal. This means that if we apply the method to background data (that is, CAST data taken outside of solar trackings) we recover the 'background rate'; the irreducible amount of background left (at a certain efficiency), which is signal-like. If instead we apply the same methods to CAST solar tracking data we get instead a set of 'axion induced X-ray candidates'. 1 In the context of the chapter we commonly talk about "background rates", but the equivalent meaning in terms of tracking data and candidates should be kept in mind.

12.1. Likelihood method

The detection principle of the GridPix detector implies physically different kinds of events will have different geometrical shapes. An example can be seen in fig. 1(a), comparing the cluster eccentricity of \cefe calibration events with background data. This motivates usage of a geometry based approach to determine likely signal-like or background-like clusters. The method to distinguish the two types of events is a likelihood cut, based on the one in (Christoph Krieger 2018). It effectively assigns a single value to each cluster for the likelihood that it is a signal-like event.

Specifically, this likelihood method is based on three different geometric properties (also see sec. 9.4.2):

- the eccentricity \(ε\) of the cluster, determined by computing the long and short axis of the two dimensional cluster and then computing the ratio of the RMS of the projected positions of all active pixels within the cluster along each axis.

- the fraction of all pixels within a circle of the radius of one transverse RMS from the cluster center, \(f\).

- the length of the cluster (full extension along the long axis) divided by the transverse RMS, \(l\).

These variables are obviously highly correlated, but still provide a very good separation between the typical shapes of X-rays and background events. They mainly characterize the "spherical-ness" as well as the density near the center of the cluster, which is precisely the intuitive sense in which these type of events differ. For each of these properties we define a probability density function \(\mathcal{P}_i\), which can then be used to define the likelihood of a cluster with properties \((ε, f, l)\) to be signal-like:

\begin{equation} \label{eq:background:likelihood_def} \mathcal{L}(ε, f, l) = \mathcal{P}_{ε}(ε) \cdot \mathcal{P}_{f}(f) \cdot \mathcal{P}_l(l) \end{equation}where the subscript is denoting the individual probability density and the argument corresponds to the individual value of each property.

This raises the important question of what defines each individual probability density \(\mathcal{P}_i\). In principle it can be defined by computing a normalized density distribution of a known dataset, which contains representative signal-like data. The \cefe calibration data from CAST contains such representative data, if not for one problem: the properties used in the likelihood method are energy dependent, as seen in fig. 1(b), a comparison of the eccentricity of X-rays from the photopeak of the \cefe calibration source compared to those from the escape peak. The CAST calibration data can only characterize two different energies, but the expected axion signal is a (model dependent) continuous spectrum.

For this reason data was taken using an X-ray tube with 8 different target / filter combinations to provide the needed data to compute likelihood distributions for X-rays of a range of different energies. The details will be discussed in the next section, 12.2.

12.1.1. Generate plot of eccentricity signal vs. background extended

We will now generate a plot comparing the eccentricity of signal events from calibration data to background events. In addition another comparison will be made between photopeak photons and escape peak photons to show that events at different energies have different shapes, motivating the need for the X-ray tube data at different energies.

import ggplotnim, nimhdf5

import ingrid / [tos_helpers, ingrid_types]

proc read (f: string, run: int ): DataFrame =

withH5(f, "r" ):

result = h5f.readRunDsets(

run = run,

chipDsets = some( (chip: 3, dsets: @[ "eccentricity", "centerX", "centerY", "energyFromCharge" ] ) )

)

proc main (calib, back: string ) =

# read data from each file, one fixed run with good statistics

# cut on silver region

let dfC = read(calib, 128 )

let dfB = read(back, 124 )

var df = bind_rows( [ ( "Calibration", dfC), ( "Background", dfB) ], "Type" )

.filter(f{ float -> bool: inRegion(`centerX`, `centerY`, crSilver) },

f{ float: `eccentricity` < 10.0 } )

ggplot(df, aes( "eccentricity", fill = "Type" ) ) +

# geom_histogram(bins = 100,

# hdKind = hdOutline,

# position = "identity",

# alpha = 0.5,

# density = true) +

xlab( "Eccentricity" ) + ylab( "Density" ) +

geom_density(color = "black", size = 1.0, alpha = 0.7, normalize = true ) +

ggtitle( "Eccentricity of calibration and background data" ) +

themeLatex(fWidth = 0.5, width = 600, baseTheme = sideBySide) +

margin(left = 2.2, right = 5.2 ) +

ggsave( "Figs/background/eccentricity_calibration_background.pdf", useTeX = true, standalone = true )

proc splitPeaks (x: float ): string =

if x >= 2.75 and x <= 3.25:

"Escapepeak"

elif x >= 5.55 and x <= 6.25:

"Photopeak"

else:

"Unclear"

let dfP = dfC

.mutate(f{ float: "Peak" ~ splitPeaks(`energyFromCharge`) } )

.filter(f{ string: `Peak` != "Unclear" },

f{`eccentricity` <= 2.0 } )

ggplot(dfP, aes( "eccentricity", fill = "Peak" ) ) +

# geom_histogram(bins = 50,

# hdKind = hdOutline,

# position = "identity",

# alpha = 0.5,

# density = true) +

xlab( "Eccentricity" ) + ylab( "Density" ) +

geom_density(color = "black", size = 1.0, alpha = 0.7, normalize = true ) +

ggtitle(r"$^{55}\text{Fe}$ photopeak (5.9 keV) and escapepeak (3 keV)" ) +

themeLatex(fWidth = 0.5, width = 600, baseTheme = sideBySide) +

margin(left = 2.2, right = 5.2 ) +

ggsave( "Figs/background/eccentricity_photo_escape_peak.pdf", useTeX = true, standalone = true )

when isMainModule:

import cligen

dispatch main

yielding

and

12.2. CAST Detector Lab

In this section we will cover the X-ray tube data taken at the CAST Detector Lab (CDL) at CERN. First, we will show the setup in sec. 12.2.1. Next the different target / filter combinations that were used will be discussed and the measurements presented in sec. 12.2.2. These measurements then are used to define the probability densities for the likelihood method, see sec. 12.2.5. Further, in sec. 12.2.6, we cover a few more details on a linear interpolation we perform to compute a likelihood distribution at an arbitrary energy. And finally, they can be used to measure the energy resolution of the detector at different energies, sec. 12.2.9.

The data presented here was also part of the master thesis of Hendrik Schmick (Schmick 2019). Further, the selection of lines and approach follows the ideas used for the single GridPix detector in (Christoph Krieger 2018) with some notable differences. For a reasoning for one particular difference in terms of data treatment, see appendix 27.

Note: in the course of the CDL related sections the term target/filter combination (implicitly including the used high voltage setting) is used interchangeably with the main fluorescence line (or just 'the fluorescence line') targeted in a specific measurement. Be careful while reading about applied high voltages in \(\si{kV}\) and energies in \(\si{keV}\). The produced fluorescence lines typically have about 'half' the energy in \(\si{keV}\) as the applied voltage in \(\si{kV}\). See tab. 19 in sec. 12.2.2 for the precise relation.

12.2.1. CDL setup

The CAST detector lab provides a vacuum test stand, which contains an X-ray tube. A Micromegas-like detector can easily be mounted to the rear end of the test stand. An X-ray tube uses a filament to produce free electrons, which are then accelerated with a high voltage of the order of multiple \(\si{kV}\). The main part of the setup is a rotateable wheel inside the vacuum chamber, which contains a set of 18 different positions with 8 different target materials, as seen in tab. 17. The highly energetic electrons interacting with the target material generate a continuous Bremsstrahlung spectrum with characteristic lines depending on the target. A second rotateable wheel contains a set of 11 different filters, see tab. 18. These can be used to filter out undesired parts of the generated spectrum by choosing a filter that is opaque in the those energy ranges.

As mentioned previously in sec. 10.1, the detector was dismounted from CAST in Feb. 2019 and installed in the CAST detector lab on . The week from to X-ray tube data was taken in the CDL.

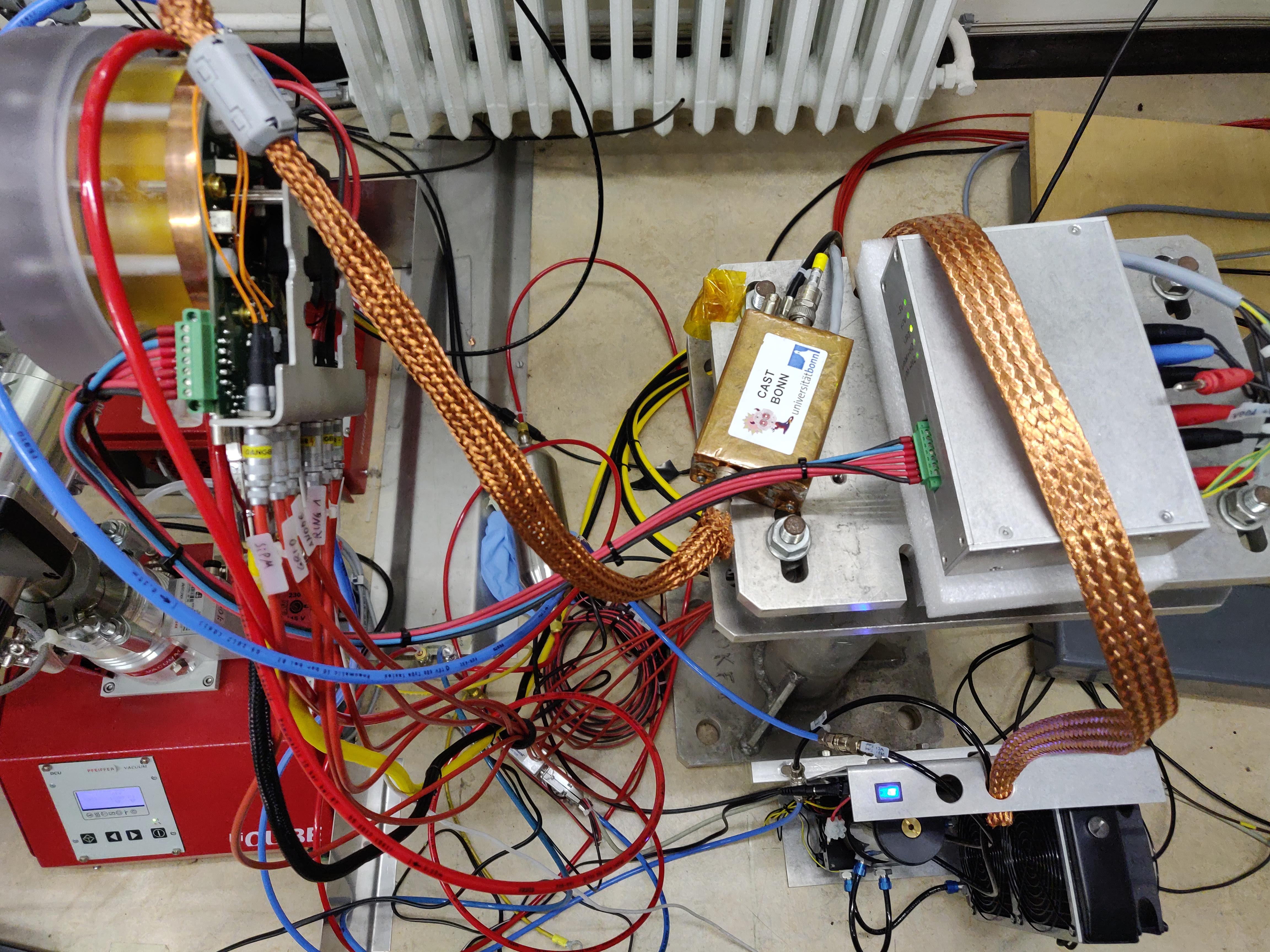

Fig. 2(a) shows the whole vacuum test stand, which contains the X-ray tube on the front left end and the Septemboard detector installed on the rear right, visible by the red HV cables and yellow HDMI cable. In fig. 2(b) we see the whole detector part from above, with the Septemboard detector installed to the vacuum test stand on the left side. The water cooling system is seen in the bottom right, with the power supply above that. The copper shielded box slightly right of the center is the Ortec pre-amplifier of the FADC, which is connected via a heavily shielded LEMO cable to the Septemboard detector. This cable was available in the CAST detector lab and proved invaluable for the measurements as it essentially removed any noise visible in the FADC signals (compared to the significant noise issues encountered at CAST, sec. 10.5.1). Given the activation threshold of the FADC with CAST amplifier settings (see sec. 11.4.2) at around \(\SI{2}{keV}\), the amplification needed to be adjusted in the CDL on a per-target basis. The shielded cable allowed the FADC to even act as a trigger for \(\SI{277}{eV}\) \(\ce{C}_{Kα}\) X-rays without any noise problems. 2

| Target Material | Position |

|---|---|

| Ti | 1 |

| Ag | 2 |

| Mn | 3 |

| C | 4 |

| BN | 5 |

| Au | 6 |

| Al | 7 - 11 |

| Cu | 12 - 18 |

| Filter material | Position |

|---|---|

| Ni 0.1 mm | 1 |

| Al 5 µm | 2 |

| PP G12 (EPIC) | 3 |

| PP G12 (EPIC) | 4 |

| Cu 10 µm | 5 |

| Ag 5 µm (99.97%) AG000130/24 | 6 |

| Fe 50 µm | 7 |

| Mn foil 50 µm (98.7% + permanent polyester) | 8 |

| Co 50 µm (99.99%) CO0000200 | 9 |

| Cr 40 µm (99.99% + permanent polyester) | 10 |

| Ti 50 µm (99.97%) TI000315 | 11 |

12.2.2. CDL measurements

The measurements were performed with 8 different target and filter combinations, with a higher density towards lower energies due to the nature of the expected solar axion flux. For each target and filter at least two runs were measured, one with and one without using the FADC as a trigger. The latter was taken to collect more statistics in a shorter amount of time, as the FADC readout slows down the data taking speed due to increased dead time. Table 19 provides an overview of all data taking runs, the target, filter and HV setting, the main X-ray fluorescence line targeted and its energy. Finally, the mean position of the main fluorescence line in the charge spectrum and its width as determined by a fit is shown. As can be seen the position moves in some cases significantly between different runs, for example in case of the \(\ce{Cu}-\ce{Ni}\) measurements. Also within a single run some stronger variation can be seen, evident by the much larger line width for example in run \(\num{320}\) compared to \(\num{319}\). See appendix sec. 26.4 for all measured spectra (pixel and charge spectra), where each plot contains all runs for a target / filter combination. For example fig. #fig:appendix:cdl_charge_Cu-Ni-15kV_by_run shows runs 319, 320 and 345 of the \(\ce{Cu}-\ce{Ni}\) measurements at \(\SI{15}{kV}\) with the strong variation in charge position of the peak visible between the runs.

The variability both between runs for the same target and filter as well as within a run shows the detector was undergoing gas gain changes similar to the variations at CAST (sec. 11.2). With an understanding that the variation is correlated to the temperature this can be explained. The small laboratory underwent significant temperature changes due to the presence of 3 people, all running machines and freely opening and closing windows, in particular due to very warm temperatures relative to a typical February in Geneva. 3 Fig. 3 shows the calculated gas gains (based on \(\SI{90}{min}\) intervals) for each run colored by the target/filter combination. It undergoes a strong, almost exponential change over the course of the data taking campaign. Because of this variation between runs, each run is treated fully separately in contrast to (Christoph Krieger 2018), where all runs for one target / filter combination were combined.

Fortunately, this significant change of gas gain does not have an impact on the distributions of the cluster properties. See appendix 27.1 for comparisons of the cluster properties of the different runs (and thus different gas gains) for each target and filter combination.

As the main purpose is to use the CDL data to generate reference distributions for certain cluster properties, the relevant clusters that correspond to known X-ray energies must be extracted from the data. This is done in two different ways:

- A set of fixed cuts (one set for each target/filter combination) is applied to each run, as presented in tab. 20. This is the same set as used in (Christoph Krieger 2018). Its main purpose is to remove events with multiple clusters and potential background contributions.

- By cutting around the main fluorescence line in the charge spectrum in a \(3σ\) region, for which the spectrum needs to be fitted with the expected lines, see sec. 12.2.3. This is done on a run-by-run basis.

The remaining data after both sets of cuts can then be combined for each target/filter combination to make up the distributions for the cluster properties as needed for the likelihood method.

For a reference of the X-ray fluorescence lines (for more exact values and \(\alpha_1\), \(\alpha_2\) values etc.) see tab. 1. The used EPIC filter refers to a filter developed for the EPIC camera of the XMM-Newton telescope. It is a bilayer of \(\SI{1600}{\angstrom}\) polyimide and \(\SI{800}{\angstrom}\) aluminium. For more information about the EPIC filters see references (Strüder et al. 2001; Turner, M. J. L. et al. 2001; Barbera et al. 2003, 2016), in particular (Barbera et al. 2016) for an overview of the materials and production.

| Run | FADC? | Target | Filter | HV [kV] | Line | Energy [keV] | μ [e⁻] | σ [e⁻] | σ/μ |

|---|---|---|---|---|---|---|---|---|---|

| 319 | y | Cu | Ni | 15 | \(\ce{Cu}\) \(\text{K}_{\alpha}\) | 8.04 | \(\num{9.509(21)e+05}\) | \(\num{7.82(18)e+04}\) | \(\num{8.22(19)e-02}\) |

| 320 | n | Cu | Ni | 15 | \(\num{9.102(22)e+05}\) | \(\num{1.010(19)e+05}\) | \(\num{1.110(21)e-01}\) | ||

| 345 | y | Cu | Ni | 15 | \(\num{6.680(12)e+05}\) | \(\num{7.15(11)e+04}\) | \(\num{1.070(16)e-01}\) | ||

| 315 | y | Mn | Cr | 12 | \(\ce{Mn}\) \(\text{K}_{\alpha}\) | 5.89 | \(\num{6.321(29)e+05}\) | \(\num{9.44(26)e+04}\) | \(\num{1.494(41)e-01}\) |

| 323 | n | Mn | Cr | 12 | \(\num{6.328(11)e+05}\) | \(\num{7.225(89)e+04}\) | \(\num{1.142(14)e-01}\) | ||

| 347 | y | Mn | Cr | 12 | \(\num{4.956(10)e+05}\) | \(\num{6.211(82)e+04}\) | \(\num{1.253(17)e-01}\) | ||

| 325 | y | Ti | Ti | 9 | \(\ce{Ti}\) \(\text{K}_{\alpha}\) | 4.51 | \(\num{4.83(31)e+05}\) | \(\num{4.87(83)e+04}\) | \(\num{1.01(18)e-01}\) |

| 326 | n | Ti | Ti | 9 | \(\num{4.615(87)e+05}\) | \(\num{4.93(25)e+04}\) | \(\num{1.068(57)e-01}\) | ||

| 349 | y | Ti | Ti | 9 | \(\num{3.90(23)e+05}\) | \(\num{4.57(57)e+04}\) | \(\num{1.17(16)e-01}\) | ||

| 328 | y | Ag | Ag | 6 | \(\ce{Ag}\) \(\text{L}_{\alpha}\) | 2.98 | \(\num{3.0682(97)e+05}\) | \(\num{3.935(79)e+04}\) | \(\num{1.283(26)e-01}\) |

| 329 | n | Ag | Ag | 6 | \(\num{3.0349(51)e+05}\) | \(\num{4.004(40)e+04}\) | \(\num{1.319(13)e-01}\) | ||

| 351 | y | Ag | Ag | 6 | \(\num{2.5432(63)e+05}\) | \(\num{3.545(49)e+04}\) | \(\num{1.394(20)e-01}\) | ||

| 332 | y | Al | Al | 4 | \(\ce{Al}\) \(\text{K}_{\alpha}\) | 1.49 | \(\num{1.4868(50)e+05}\) | \(\num{2.027(38)e+04}\) | \(\num{1.364(26)e-01}\) |

| 333 | n | Al | Al | 4 | \(\num{1.3544(30)e+05}\) | \(\num{2.539(24)e+04}\) | \(\num{1.875(18)e-01}\) | ||

| 335 | y | Cu | EPIC | 2 | \(\ce{Cu}\) \(\text{L}_{\alpha}\) | 0.930 | \(\num{8.885(99)e+04}\) | \(\num{1.71(11)e+04}\) | \(\num{1.93(13)e-01}\) |

| 336 | n | Cu | EPIC | 2 | \(\num{7.777(94)e+04}\) | \(\num{2.39(14)e+04}\) | \(\num{3.08(19)e-01}\) | ||

| 337 | n | Cu | EPIC | 2 | \(\num{7.86(15)e+04}\) | \(\num{2.47(11)e+04}\) | \(\num{3.14(15)e-01}\) | ||

| 339 | y | Cu | EPIC | 0.9 | \(\ce{O }\) \(\text{K}_{\alpha}\) | 0.525 | \(\num{5.77(11)e+04}\) | \(\num{1.38(22)e+04}\) | \(\num{2.39(39)e-01}\) |

| 340 | n | Cu | EPIC | 0.9 | \(\num{4.778(31)e+04}\) | \(\num{1.230(50)e+04}\) | \(\num{2.58(11)e-01}\) | ||

| 342 | y | C | EPIC | 0.6 | \(\ce{C }\) \(\text{K}_{\alpha}\) | 0.277 | \(\num{4.346(36)e+04}\) | \(\num{1.223(29)e+04}\) | \(\num{2.814(70)e-01}\) |

| 343 | n | C | EPIC | 0.6 | \(\num{3.952(20)e+04}\) | \(\num{1.335(14)e+04}\) | \(\num{3.379(40)e-01}\) |

| Target | Filter | line | HV | length | RMSTmin | RMSTmax | eccentricity |

|---|---|---|---|---|---|---|---|

| Cu | Ni | \(\ce{Cu}\) \(\text{K}_{\alpha}\) | 15 | 0.1 | 1.0 | 1.3 | |

| Mn | Cr | \(\ce{Mn}\) \(\text{K}_{\alpha}\) | 12 | 0.1 | 1.0 | 1.3 | |

| Ti | Ti | \(\ce{Ti}\) \(\text{K}_{\alpha}\) | 9 | 0.1 | 1.0 | 1.3 | |

| Ag | Ag | \(\ce{Ag}\) \(\text{L}_{\alpha}\) | 6 | 6.0 | 0.1 | 1.0 | 1.4 |

| Al | Al | \(\ce{Al}\) \(\text{K}_{\alpha}\) | 4 | 0.1 | 1.1 | 2.0 | |

| Cu | EPIC | \(\ce{Cu}\) \(\text{L}_{\alpha}\) | 2 | 0.1 | 1.1 | 2.0 | |

| Cu | EPIC | \(\ce{O }\) \(\text{K}_{\alpha}\) | 0.9 | 0.1 | 1.1 | 2.0 | |

| C | EPIC | \(\ce{C }\) \(\text{K}_{\alpha}\) | 0.6 | 6.0 | 0.1 | 1.1 |

12.2.2.1. Table of fit lines extended

This is the full table.

| cuNi15 | mnCr12 | tiTi9 | agAg6 | alAl4 | cuEpic2 | cuEpic09 | cEpic06 |

|---|---|---|---|---|---|---|---|

| \(\text{EG}(\ce{Cu}_{Kα})\) | \(\text{EG}(\ce{Mn}_{Kα})\) | \(\text{EG}(\ce{Ti}_{Kα})\) | \(\text{EG}(\ce{Ag}_{Lα})\) | \(\text{EG}(Al_{Kα})\) | \(\text{G}(\ce{Cu}_{Lα})\) | \(\text{G}(O_{Kα})\) | \(\text{G}(\ce{C}_{Kα})\) |

| \(\text{EG}(\ce{Cu}^{\text{esc}})\) | \(\text{G}(\ce{Mn}^{\text{esc}})\) | \(\text{G}(\ce{Ti}^{\text{esc}}_{Kα})\) | G: | G: | #G: | G: | |

| #name = \(\ce{Ti}^{\text{esc}}_{Kα}\) | name = \(\ce{Ag}_{Lβ}\) | name = \(\ce{Cu}_{Lβ}\) | # name = \(\ce{C}_{Kα}\) | name = \(\ce{O}_{Kα}\) | |||

| # \(μ = eμ(\ce{Ti}_{Kα}) · \frac{1.537}{4.511}\) | \(N = eN(\ce{Ag}_{Lα}) · 0.1\) | \(N = N(\ce{Cu}_{Lα}) / 5.0\) | # \(μ = μ(O_{Kα}) · (0.277/0.525)\) | \(μ = μ(\ce{C}_{Kα}) · \frac{0.525}{0.277}\) | |||

| G: | \(μ = eμ(\ce{Ag}_{Lα}) · \frac{3.151}{2.984}\) | \(μ = μ(\ce{Cu}_{Lα}) · \frac{0.9498}{0.9297}\) | # \(σ = σ(O_{Kα})\) | \(σ = σ(\ce{C}_{Kα})\) | |||

| name = \(\ce{Ti}^{\text{esc}}_{Kβ}\) | \(σ = eσ(\ce{Ag}_{Lα})\) | \(σ = σ(\ce{Cu}_{Lα})\) | #G: | ||||

| \(μ = eμ(\ce{Ti}_{Kα}) · \frac{1.959}{4.511}\) | G: | # name = \(\ce{Fe}_{Lα}β\) | |||||

| \(σ = σ(\ce{Ti}^{\text{esc}}_{Kα})\) | name = \(\ce{O}_{Kα}\) | # \(μ = μ(O_{Kα}) · \frac{0.71}{0.525}\) | |||||

| G: | \(N = N(\ce{Cu}_{Lα}) / 3.5\) | # \(σ = σ(O_{Kα})\) | |||||

| name = \(\ce{Ti}_{Kβ}\) | \(μ = μ(\ce{Cu}_{Lα}) · \frac{0.5249}{0.9297}\) | #G: | |||||

| \(μ = eμ(\ce{Ti}_{Kα}) · \frac{4.932}{4.511}\) | \(σ = σ(\ce{Cu}_{Lα}) / 2.0\) | # name = \(\ce{Ni}_{Lα}β\) | |||||

| \(σ = eσ(\ce{Ti}_{Kα})\) | # \(μ = μ(O_{Kα}) · \frac{0.86}{0.525}\) | ||||||

| cuNi15 Q | mnCr12 Q | tiTi9 Q | agAg6 Q | alAl4 Q | cuEpic2 Q | cuEpic09 Q | cEpic06 Q |

| \(\text{G}(\ce{Cu}_{Kα})\) | \(\text{G}(\ce{Mn}_{Kα})\) | \(\text{G}(\ce{Ti}_{Kα})\) | \(\text{G}(\ce{Ag}_{Lα})\) | \(\text{G}(Al_{Kα})\) | \(\text{G}(\ce{Cu}_{Lα})\) | \(\text{G}(O_{Kα})\) | \(\text{G}(\ce{C}_{Kα})\) |

| \(\text{G}(\ce{Cu}^{\text{esc}})\) | \(\text{G}(\ce{Mn}^{\text{esc}})\) | \(\text{G}(\ce{Ti}^{\text{esc}}_{Kα})\) | G: | G: | G: | G: | |

| #name = \(\ce{Ti}^{\text{esc}}_{Kα}\) | name = \(\ce{Ag}_{Lβ}\) | name = \(\ce{Cu}_{Lβ}\) | name = \(\ce{C}_{Kα}\) | name = \(\ce{O}_{Kα}\) | |||

| # \(μ = eμ(\ce{Ti}_{Kα}) · \frac{1.537}{4.511}\) | \(N = N(\ce{Ag}_{Lα}) · 0.1\) | \(N = N(\ce{Cu}_{Lα}) / 5.0\) | \(N = N(O_{Kα}) / 10.0\) | \(μ = μ(\ce{C}_{Kα}) · \frac{0.525}{0.277}\) | |||

| G: | \(μ = μ(\ce{Ag}_{Lα}) · \frac{3.151}{2.984}\) | \(μ = μ(\ce{Cu}_{Lα}) · \frac{0.9498}{0.9297}\) | \(μ = μ(O_{Kα}) · \frac{277.0}{524.9}\) | \(σ = σ(\ce{C}_{Kα})\) | |||

| name = \(\ce{Ti}^{\text{esc}}_{Kβ}\) | \(σ = σ(\ce{Ag}_{Lα})\) | \(σ = σ(\ce{Cu}_{Lα})\) | \(σ = σ(O_{Kα})\) | ||||

| \(μ = μ(\ce{Ti}_{Kα}) · \frac{1.959}{4.511}\) | # $\text{G}: | ||||||

| \(σ = σ(\ce{Ti}^{\text{esc}}_{Kα})\) | # name = \(\ce{O}_{Kα}\) | ||||||

| G: | # \(N = N(\ce{Cu}_{Lα}) / 4.0\) | ||||||

| name = \(\ce{Ti}_{Kβ}\) | # \(μ = μ(\ce{Cu}_{Lα}) · \frac{0.5249}{0.9297}\) | ||||||

| \(μ = μ(\ce{Ti}_{Kα}) · \frac{4.932}{4.511}\) | # \(σ = σ(\ce{Cu}_{Lα}) / 2.0\) | ||||||

| \(σ = σ(\ce{Ti}_{Kα})\) |

12.2.2.2. Extra info on target materials extended

[X]EXTEND THIS TO INCLUDE CITATIONS[ ]ADD OUR OWN POLYIAMID + ALUMINUM TRANSMISSION PLOT -> 1600 Å polyimide + 800 Å Al -> Q: What do we use for the polyimide? Guess below text can tell us the atomic fractions in principle.

EPIC filters: https://www.cosmos.esa.int/web/xmm-newton/technical-details-epic section 6 about filters contains:

There are four filters in each EPIC camera. Two are thin filters made of 1600 Å of poly-imide film with 400 Å of aluminium evaporated on to one side; one is the medium filter made of the same material but with 800 Å of aluminium deposited on it; and one is the thick filter. This is made of 3300 Å thick Polypropylene with 1100 Å of aluminium and 450 Å of tin evaporated on the film.

i.e. the EPIC filters contain aluminum. That could explain why the Cu-EPIC 2kV data contains something that might be either aluminum fluorescence or at least just continuous spectrum that is not filtered due to the absorption edge of aluminum there!

Relevant references: (Strüder et al. 2001; Turner, M. J. L. et al. 2001; Barbera et al. 2003, 2016)

In particular (Barbera et al. 2016) contains much more details about the EPIC filters and its actual composition:

Filter manufacturing process The EPIC Thin and Medium filters manufactured by MOXTEX consist of a thin film of polyimide, with nominal thickness of 160 nm, coated with a single layer of aluminum whose nominal thickness is 40 nm for the Thin and 80 nm for the Medium filters, respectively. The polyimide thin films are produced by spin-coating of a polyamic acid (PAA) solution obtained by dissolving two precursor monomers (an anhydride and an amine) in an organic polar solvent. For the EPIC Thin and Medium filters the two precursors are the Biphenyldianhydride (BPDA) and the p-Phenyldiamine (PDA) (Dupont PI-2610), and the solvent is N-methyl-2-pyrrolidone (NMP) and Propylene Glycol Monomethyl Ether (Dupont T9040 thinner). To convert the PAA into polyimide, the solution is heated up to remove the NMP and to induce the imidization through the evaporation of water molecules. The film thickness is controlled by spin coating parameters, PAA viscosity, and curing temperature [19]. The polyimide thin membrane is attached with epoxy onto a transfer ring and the aluminum is evaporated in a few runs, distributed over 2–3 days, each one depositing a metal layer of about 20 nm thickness.

The EPIC Thin and Medium flight qualified filters have been manufactured during a period of 1 year, from January’96 to January’97. Table 1 lists the full set of flight-qualified filters (Flight Model and Flight Spare) delivered to the EPIC consortium, together with their most relevant parameters. Along with the production of the flight qualified filters, the prototypes and the qualification filters (not included in this list) have been manufactured and tested for the construction of the filter transmission model and to assess the stability in time of the Optical/UV transparency (opacity). Among these qualification filters are T4, G12, G18, and G19 that have been previously mentioned.

Further it states that 'G12' refers to the medium filter

UV/Vis transmission measurements in the range 190–1000 nm have been performed between May 1997 and July 2002 on one Thin (T4) and one medium (G12) EPIC on-ground qualification filters to monitor their time stability [16].

PP G12 is the name written in the CDL documentation! Mystery solved.

12.2.2.3. Reconstruct all CDL data extended

Reconstructing all CDL data is done by either using runAnalysisChain

on the directory (currently not tested) or by manually running

raw_data_manipulation and reconstruction as follows:

Take note that you may change the paths of course. The paths chosen here are those in use during the writing process of the thesis.

cd ~/CastData/data/CDL_2019

raw_data_manipulation -p . -r Xray -o ~/CastData/data/CDL_2019/CDL_2019_Raw.h5

And now for the reconstruction:

cd ~/CastData/data/CDL_2019

reconstruction -i ~/CastData/data/CDL_2019/CDL_2019_Raw.h5 -o ~/CastData/data/CDL_2019/CDL_2019_Reco.h5

reconstruction -i ~/CastData/data/CDL_2019/CDL_2019_Reco.h5 --only_charge

reconstruction -i ~/CastData/data/CDL_2019/CDL_2019_Reco.h5 --only_fadc

reconstruction -i ~/CastData/data/CDL_2019/CDL_2019_Reco.h5 --only_gas_gain

reconstruction -i ~/CastData/data/CDL_2019/CDL_2019_Reco.h5 --only_energy_from_e

At this point the reconstructed CDL H5 file is generally done and ready to be used in the next section.

12.2.2.4. Generate plots and tables for this section extended

To generate the plots of the above section (and much more) as well as

the table summarizing all the runs and their fits, we continue

with the cdl_spectrum_creation tool as follows:

Make sure the config file uses fitByRun to reproduce the same plots!

ESCAPE_LATEX performs replacement of characters like & used in

titles.

F_WIDTH=0.9 ESCAPE_LATEX=true USE_TEX=true \

cdl_spectrum_creation \

-i ~/CastData/data/CDL_2019/CDL_2019_Reco.h5 \

--cutcdl --dumpAccurate --hideNloptFit \

--plotPath ~/phd/Figs/CDL/fWidth0.9/

Note:

This generates lots of plots, all placed in the output directory. The

fWidth0.9 subdirectory is because here we wish to produce them with

slightly smaller fonts as the single charge spectrum we use in the

next section is inserted standalone.

Among them are:

- plots of the target / filter kinds with all fits and the raw / cut data as histogram

- plots of histograms of the raw data split by run -> useful to see the detector variability!

- energy resolution plot

- peak position of hits / charge vs energy (to see if indeed linear)

- calibrated, normalized histogram / kde plot of the target/filter combinations in energy (calibrated using the main line that was fitted)

- ridgeline plots of KDEs of all the geometric cluster properties split by target/filter kind and run

- gas gain of each gas gain slice in the CDL data, split by run & target filter kind

- temperature data of those CDL runs that contain it

- finally it generates the (almost complete) tables as shown in the above section for the data overview including μ, σ and μ/σ

Note about temperature plots:

-

-> This plot including the IMB temperature shows us precisely what we expect: the temperature of the IMB and the Septemboard is directly related and just an offset of one another. This is very valuable information to have as a reference

-

-

As we ran the command from /tmp/ the output plots will be in a

/tmp/out/CDL* directory. We copy over the generated files files

including calibrated_cdl_energy_histos.pdf and

calibrated_cdl_energy_kde.pdf here to /phd/Figs/CDL/.

Finally, running the code snippet as mentioned above also produces table 19 as well as the equivalent for the pixel spectra and writes them to stdout at the end!

12.2.2.5. Generate the calibration-cdl-2018.h5 file extended

This is also done using the cdl_spectrum_creation. Assuming the

reconstructed CDL H5 file exists, it is as simple as:

cdl_spectrum_creation -i ~/CastData/data/CDL_2019/CDL_2019_Reco.h5 --genCdlFile \

--outfile ~/CastData/data/CDL_2019/calibration-cdl

which generates calibration-cdl-2018.h5 for us.

Make sure the config file uses fitByRun to reproduce the same plots!

12.2.2.6. Get the table of fit lines from code extended

Our code cdl_spectrum_creation can be used to output the final

fitting functions in a format like the table inserted in the main

text, thanks to using a CT declarative definition of the fit

functions.

We do this by running:

cdl_spectrum_creation --printFunctions

12.2.2.7. Historical weather data for Geneva during CDL data taking extended

More or less location of CDL (side of building 17 at Meyrin CERN site):

46.22965, 6.04984

(https://www.openstreetmap.org/search?whereami=1&query=46.22965%2C6.04984#map=19/46.22965/6.04984)

Here we can see historic weather data plots from Meyrin from the relevant time range: https://meteostat.net/en/place/ch/meyrin?s=06700&t=2019-02-15/2019-02-21

This at least proves the weather was indeed very nice outside, sunny and over 10°C peak temperatures during the day!

I exported the data and it's available here: ./resources/weather_data_meyrin_cdl_data_taking.csv

Legend of the columns:

| # | Column | Description |

|---|---|---|

| 1 | time | Time |

| 2 | temp | Temperature |

| 3 | dwpt | Dew Point |

| 4 | rhum | Relative Humidity |

| 5 | prcp | Total Precipitation |

| 6 | snow | Snow Depth |

| 7 | wdir | Wind Direction |

| 8 | wspd | Wind Speed |

| 9 | wpgt | Peak Gust |

| 10 | pres | Air Pressure |

| 11 | tsun | Sunshine Duration |

| 12 | coco | Weather Condition Code |

import ggplotnim, times

var df = readCsv( "/home/basti/phd/resources/weather_data_meyrin_cdl_data_taking.csv" )

.mutate(f{ string -> int: "timestamp" ~ parseTime(`time`, "yyyy-MM-dd HH:mm:ss", local() ).toUnix() } )

.rename(f{ "Temperature [°C]" <- "temp" }, f{ "Pressure [mbar]" <- "pres" } )

df = df.gather( [ "Temperature [°C]", "Pressure [mbar]" ], "Data", "Value" )

echo df

ggplot(df, aes( "timestamp", "Value", color = "Data" ) ) +

facet_wrap( "Data", scales = "free" ) +

facetMargin( 0.5 ) +

geom_line() +

xlab( "Date", rotate = -45.0, alignTo = "right", margin = 2.0 ) +

margin(bottom = 2.5, right = 4.75 ) +

legendPosition( 0.84, 0.0 ) +

scale_x_date(isTimestamp = true,

dateSpacing = initDuration(hours = 12 ),

formatString = "yyyy-MM-dd HH:mm",

timeZone = local() ) +

ggtitle( "Meyrin, Geneva weather during CDL data taking campaign" ) +

ggsave( "/home/basti/phd/Figs/CDL/weather_meyrin_during_cdl_data_taking.pdf", width = 1000, height = 600 )

The weather during the data taking campaign is shown in fig. 4. It's good to see my memory served me right.

12.2.3. Charge spectra of the CDL data

To the spectra of each run of the charge data a mixture of Gaussian functions is fitted. 4

Specifically the Gaussian expressed as

\begin{equation} G(E; \mu, \sigma, N) = \frac{N}{\sqrt{2 \pi}} \exp\left(-\frac{(E - \mu)^2}{2\sigma^2}\right), \end{equation}is used and will be referenced as \(G\) with possible arguments from here on. Note that while the physical X-ray transition lines are Lorentzian shaped (Weisskopf and Wigner 1997), the lines as detected by a gaseous detector are entirely dominated by detector resolution, resulting in Gaussian lines. For other types of detectors used in X-ray fluorescence (XRF) analysis, convolutions of Lorentzian and Gaussian distributions are used (Huang and Lim 1986; Heckel and Scholz 1987), called pseudo Voigt functions (Roberts, Riddle, and Squier 1975; Gunnink 1977).

The functions fitted to the different spectra then depend on which fluorescence lines are visible. The full list of all combinations is shown in tab. 22. Typically each line that is expected from the choice of target, filter and chosen voltage is fitted, if it can be visually identified in the data. 5 If no 'argument' is shown in the table to \(G\) it means each parameter (\(N, μ, σ\)) is fitted. Any specific argument given implies that parameter is fixed relative to another parameter. For example \(μ^{\ce{Ag}}_{Lα}·\left(\frac{3.151}{2.984}\right)\) fixes the \(Lβ\) line of silver to the fit parameter \(μ^{\ce{Ag}}_{Lα}\) of the \(Lα\) line with a multiplicative shift based on the relative eneries of \(Lα\) to \(Lβ\). In some cases the amplitude is fixed between different lines where relative amplitudes cannot be easily predicted or determined, e.g. in one of the \(\ce{Cu}-\text{EPIC}\) runs, the \(\ce{C}_{Kα}\) line is fixed to a tenth of the \(\ce{O}_{Kα}\) line. This is done to get a good fit based on trial and error.

Finally, in both \(\ce{Cu}-\text{EPIC}\) lines two 'unknown' Gaussians are added to cover the behavior of the data at higher charges. The physical origin of this additional contribution is not entirely clear. The used EPIC filter contains an aluminum coating (Barbera et al. 2016). As such it has the aluminum absorption edge at about \(\SI{1.5}{keV}\), possibly matching the additional contribution for the \(\SI{2}{kV}\) dataset. Whether it is from a continuous part of the spectrum or a form of aluminum fluorescence is not clear however. This explanation does not work in the \(\SI{0.9}{kV}\) case, which is why the line is deemed 'unknown'. It may also be a contribution of the specific polyimide used in the EPIC filter (Barbera et al. 2016). Another possibility is it is a case of multi-cluster events, which are too close to be split, but with properties close enough to a single X-ray as to not be removed by the cleaning cuts (which gets more likely the lower the energy is).

The full set of all fits (including the pixel spectra) is shown in appendix 26.4. Fig. 5 shows the charge spectrum of the \(\ce{Ti}\) target and \(\ce{Ti}\) filter at \(\SI{9}{kV}\) for one of the runs. These plots show the raw data in the green histogram and the data left after application of the cleaning cuts (tab. 20) in the purple histogram. The black line is the result of the fit as described in tab. 22 with the resulting parameters shown in the box (parameters that were fixed are not shown). The black straight lines with grey error bands represent the \(3σ\) region around the main fluorescence line, which is used to extract those clusters likely from the fluorescence line and therefore known energy.

\footnotesize

| Target | Filter | HV [kV] | Fit functions |

|---|---|---|---|

| Cu | Ni | 15 | \(G^{\ce{Cu}}_{Kα} + G^{\ce{Cu}, \text{esc}}_{Kα}\) |

| Mn | Cr | 12 | \(G^{\ce{Mn}}_{Kα} + G^{\ce{Mn}, \text{esc}}_{Kα}\) |

| Ti | Ti | 9 | \(G^{\ce{Ti}}_{Kα} + G^{\ce{Ti}, \text{esc}}_{Kα} + G^{\ce{Ti}}_{Kβ}\left( μ^{\ce{Ti}}_{Kα}·\left(\frac{4.932}{4.511}\right), σ^{\ce{Ti}}_{Kα} \right) + G^{\ce{Ti}, \text{esc}}_{Kβ}\left( μ^{\ce{Ti}}_{Kα}·\left(\frac{1.959}{4.511}\right), σ^{\ce{Ti}, \text{esc}}_{Kα} \right)\) |

| Ag | Ag | 6 | \(G^{\ce{Ag}}_{Lα} + G^{\ce{Ag}}_{Lβ}\left( N^{\ce{Ag}}_{Lα}·0.56, μ^{\ce{Ag}}_{Lα}·\left(\frac{3.151}{2.984}\right), σ^{\ce{Ag}}_{Lα} \right)\) |

| Al | Al | 4 | \(G^{\ce{Al}}_{Kα}\) |

| Cu | EPIC | 2 | \(G^{\ce{Cu}}_{Lα} + G^{\ce{Cu}}_{Lβ}\left( N^{\ce{Cu}}_{Lα}·\left(\frac{0.65}{1.11}\right), μ^{\ce{Cu}}_{Lα}·\left(\frac{0.9498}{0.9297}\right), σ^{\ce{Cu}}_{Lα} \right) + G^{\ce{O}}_{Kα}\left( \frac{N^{\ce{Cu}}_{Lα}}{3.5}, μ^{\ce{Cu}}_{Lα}·\left(\frac{0.5249}{0.9297}\right), \frac{σ^{\ce{Cu}}_{Lα}}{2.0} \right) + G_{\text{unknown}}\) |

| Cu | EPIC | 0.9 | \(G^{\ce{O}}_{Kα} + G^{\ce{C}}_{Kα}\left( \frac{N^{\ce{O}}_{Kα}}{10.0}, μ^{\ce{O}}_{Kα}·\left(\frac{277.0}{524.9}\right), σ^{\ce{O}}_{Kα} \right) + G_{\text{unknown}}\) |

| C | EPIC | 0.6 | \(G^{\ce{C}}_{Kα} + G^{\ce{O}}_{Kα}\left( μ^{\ce{C}}_{Kα}·\left(\frac{0.525}{0.277}\right), σ^{\ce{C}}_{Kα} \right)\) |

\normalsize

12.2.3.1. Notes on implementation details of fit functions extended

[ ]REWRITE THE BELOW (much of that is irrelevant for the full thesis) -> Place into :noexport: section!

The exact implementation in use for both the gaussian:

The fitting was performed both with MPFit (Levenberg Marquardt C implementation) as a comparison, but mainly using NLopt (via https://github.com/Vindaar/nimnlopt). Specifically the gradient based "Method of Moving Asymptotes" algorithm was used (NLopt provides a large number of different minimization / maximization algorithms to choose from) to perform maximum likelihood estimation written in the form of a poisson distributed log likelihood \(\chi^2\):

where \(n_i\) is the number of events in bin \(i\) and \(y_i\) the model prediction of events in bin \(i\).

The required gradient was calculated simply using the symmetric derivative. Other algorithms and minimization functions were tried, but this proved to be the most reliable. See the implementation: https://github.com/Vindaar/TimepixAnalysis/blob/master/Analysis/ingrid/calibration.nim#L131-L162

12.2.3.2. Pixel spectra extended

In case of the pixel spectra the fit functions are generally very similar, but in some cases the regular Gaussian is replaced by an 'exponential Gaussian' defined as follows:

where the constant \(c\) is chosen such that the resulting function is continuous. The idea being that in the pixel spectra can have a longer exponential tail on the left side due to threshold effects and multiple electrons entering a single grid hole.

The implementation of the exponential Gaussian is found here: https://github.com/Vindaar/TimepixAnalysis/blob/master/Analysis/ingrid/calibration.nim#L182-L194

[ ]REPLACE LINKS SUCH AS THESE BY TAGGED VERSION AND NOT DIRECT INLINE LINKS

The full list of all fit combinations for the pixel spectra is shown in tab. 23. The fitting otherwise works the same way, using a non-linear least square fit both implemented by hand using MMA as well as a standard Levenberg-Marquardt fit.

| Target | Filter | HV [kV] | Fit functions |

|---|---|---|---|

| Cu | Ni | 15 | \(EG^{\ce{Cu}}_{Kα} + EG^{\ce{Cu}, \text{esc}}_{Kα}\) |

| Mn | Cr | 12 | \(EG^{\ce{Mn}}_{Kα} + G^{\ce{Mn}, \text{esc}}_{Kα}\) |

| Ti | Ti | 9 | \(EG^{\ce{Ti}}_{Kα} + G^{\ce{Ti}, \text{esc}}_{Kα} + G^{\ce{Ti}, \text{esc}}_{Kβ}\left( eμ^{\ce{Ti}}_{Kα}·(\frac{1.959}{4.511}), σ^{\ce{Ti}, \text{esc}}_{Kα} \right) + G^{\ce{Ti}}_{Kβ}\left( eμ^{\ce{Ti}}_{Kα}·(\frac{4.932}{4.511}), eσ^{\ce{Ti}}_{Kα} \right)\) |

| Ag | Ag | 6 | \(EG^{\ce{Ag}}_{Lα} + G^{\ce{Ag}}_{Lβ}\left( eN^{\ce{Ag}}_{Lα}·0.56, eμ^{\ce{Ag}}_{Lα}·(\frac{3.151}{2.984}), eσ^{\ce{Ag}}_{Lα} \right)\) |

| Al | Al | 4 | \(EG^{\ce{Al}}_{Kα}\) |

| Cu | EPIC | 2 | \(G^{\ce{Cu}}_{Lα} + G^{\ce{Cu}}_{Lβ}\left( N^{\ce{Cu}}_{Lα}·(\frac{0.65}{1.11}), μ^{\ce{Cu}}_{Lα}·(\frac{0.9498}{0.9297}), σ^{\ce{Cu}}_{Lα} \right) + G^{\ce{O}}_{Kα}\left( \frac{N^{\ce{Cu}}_{Lα}}{3.5}, μ^{\ce{Cu}}_{Lα}·(\frac{0.5249}{0.9297}), \frac{σ^{\ce{Cu}}_{Lα}}{2.0} \right) + G_{\text{unknown}}\) |

| Cu | EPIC | 0.9 | \(G^{\ce{O}}_{Kα} + G_{\text{unknown}}\) |

| C | EPIC | 0.6 | \(G^{\ce{C}}_{Kα} + G^{\ce{O}}_{Kα}\left( μ^{\ce{C}}_{Kα}·(\frac{0.525}{0.277}), σ^{\ce{C}}_{Kα} \right)\) |

12.2.3.3. Generate plot of charge spectrum extended

See sec. 12.2.2.4 for the commands used to generate all the plots for the pixel and charge spectra, including the one used in the above section.

12.2.4. Overview of CDL data in energy

With the fits to the charge spectra performed on a run-by-run basis, they can be utilized to calibrate the energy of each cluster in the data. 6 This is done by using the linear relationship between charge and energy and therefore using the charge of the main fluorescence line as computed from the fit. Each run is therefore self-calibrated (in contrast to our normal energy calibration approach 11.1). Fig. 6 shows normalized histograms of all CDL data after applying basic cuts and performing said energy calibrations.

12.2.5. Definition of the reference distributions

Having performed fits to all charge spectra of each run and the position of the main fluorescence line and its width determined, the reference distributions for the cluster properties entering the likelihood can be computed. By taking all clusters within the \(3σ\) bounds around the main fluorescence line of the data used for the fit, the dataset is selected. This guarantees to leave mainly X-rays of the targeted energy for each line in the dataset. As the fit is performed for each run separately, the \(3σ\) charge cut is performed run by run and then all data combined for each fluorescence line (target, filter & HV setting).

The desired reference distributions then are simply the normalized histograms of the clusters in each of the properties. With 8 targeted fluorescence lines and 3 properties this yields a total of 24 reference distributions. Each histogram is then interpreted as a probability density function (PDF) for clusters 'matching' (more on this in sec. 12.2.6) the energy of its fluorescence line. An overview of all the reference distributions is shown in fig. 7. We can see that all distributions tend to get wider towards lower energies (towards the top of the plot). This is expected and due to smaller clusters having less primary electrons and therefore statistical variations in geometric properties playing a more important role. 7 The binning shown in the figure is the exact binning used to define the PDF. In case of the fraction of pixels within a transverse RMS radius, bins with significantly higher counts are observed at low energies. This is not a binning artifact, but a result of the definition of the variable. The property computes the fraction of pixels that lie within a circle of the radius corresponding to the transverse RMS of the cluster around the cluster center (see fig. #fig:reco:property_explanations). At energies with few pixels in total, the integer nature of \(N\) or \(N+1\) primary electrons (active pixels) inside the radius becomes apparent.

The binning in the histograms is chosen by hand such that the binning is as fine as possible without leading to significant statistical fluctuations, as those would have a direct effect on the PDFs leading to unphysical effects on the probabilities. Ideally an approach of either an automatic bin selection algorithm or something like a kernel density estimation should be used. However, the latter is slightly problematic due to the integer effects in the low energies of the fraction in transverse RMS variable.

The summarized 'recipe' of the approach is therefore:

- apply cleaning cuts according to tab. 20,

- perform fits according to tab. 22,

- cut to the \(3σ\) region around the main fluorescence line of the performed fit (i.e. the first term in tab. 22),

- combine all remaining clusters for the same fluorescence line from each run,

- compute a histogram for each desired cluster property and each fluorescence line,

- normalize the histogram to define the reference distribution, \(\mathcal{P}_i\)

12.2.5.1. Generate ridgeline plot of all reference distributions extended

The ridgeline plot used in the above section is produced using

TimepixAnalysis/Plotting/plotCdl. See

sec. 12.2.6.1 for the exact commands as

more plots are produced used in other sections.

12.2.5.2. Generate plots for interpolated likelihood distribution and logL variable correlations [/] extended

In the main text I mentioned that the \(\ln\mathcal{L}\) variables are all very correlated. Let's create a few plots to look at how correlated they actually are. We'll generate scatter plots of the three variables against another, using a color scale for the third variable.

[ ]WRITE A FEW WORDS ABOUT THE PLOTS![X]Create a plot of the likelihood distributions interpolated over all energies! Well, it doesn't work in the way I thought, because obviously we cannot just compute the likelihood distributions directly! We can compute a likelihood value for a cluster at an arbitrary energy, but to get a likelihood distribution we'd need actual X-ray data at all energies! The closest we have to that is the general CDL data (not just the main peak & using correct energies for each cluster) -> Attempt to use the CDL data with only the cleaning cuts -> Question: Which tool do we add this to? Or separate here using CDL reconstructed data?

How much statistics do we even have if we end up splitting everything over 1000 energies? Like nothing… Done in:

now where we compare the no morphing vs. the linear morphing case. Much more interesting than I would have thought, because one can clearly see the effect of the morphing on the logL values that are computed! This is a pretty nice result to showcase the morphing is actually useful. Will be put into appendix and linked.

[X]ADD LINE SHOWING WHERE THE CUT VALUES ARE TO THE PLOT -> The hard corners in the interpolated data is because the reference distributions are already pre-binned of course! So if by just changing the bins slightly the ε cut value still lies in the same bin, of course we see a 'straight' line in energy. Hence a non smooth curve. We could replace this all:- either by a smooth KDE and interpolate based on that as an alternative. I should really try this, the only questionable issue is the fracRms distribution and its discrete features. However we don't actually guarantee in any way that in current approach the bins actually correspond to any fixed integer values. It is quite likely that the bins that show smaller/larger values are too wide/small!

- or by keeping everything as is, but then performing a spline interpolation on the distinct (logL, energy) pairs such that the result is a smooth logL value. "Better bang for buck" than doing full KDE and avoids the issue of discreteness in the fracRms distribution. Even though the interpolated values probably correspond to something as if the fracRms distribution had been smooth.

import std / [os, strutils]

import ingrid / ingrid_types

import ingrid / private / [cdl_utils, cdl_cuts, hdf5_utils, likelihood_utils]

import pkg / [ggplotnim, nimhdf5]

const TpxDir = "/home/basti/CastData/ExternCode/TimepixAnalysis"

const cdl_runs_file = TpxDir / "resources/cdl_runs_2019.org"

const fname = "/home/basti/CastData/data/CDL_2019/CDL_2019_Reco.h5"

const cdlFile = "/home/basti/CastData/data/CDL_2019/calibration-cdl-2018.h5"

const dsets = @[ "totalCharge", "eccentricity", "lengthDivRmsTrans", "fractionInTransverseRms" ]

proc calcEnergyFromFits (df: DataFrame, fit_μ: float, tfKind: TargetFilterKind ): DataFrame =

## Given the fit result of this data type & target/filter combination compute the energy

## of each cluster by using the mean position of the main peak and its known energy

result = df

result [ "Target" ] = $tfKind

let invTab = getInverseXrayRefTable()

let energies = getXrayFluorescenceLines()

let lineEnergy = energies[invTab[$tfKind] ]

result = result.mutate(f{ float: "energy" ~ `totalCharge` / fit_μ * lineEnergy} )

let h5f = H5open (fname, "r" )

var df = newDataFrame()

for tfKind in TargetFilterKind:

for (run, grp) in tfRuns(h5f, tfKind, cdl_runs_file):

var dfLoc = newDataFrame()

for dset in dsets:

if dfLoc.len == 0:

dfLoc = toDf( { dset : h5f.readCutCDL(run, 3, dset, tfKind, float64 ) } )

else:

dfLoc[dset] = h5f.readCutCDL(run, 3, dset, tfKind, float64 )

dfLoc[ "runNumber" ] = run

dfLoc[ "tfKind" ] = $tfKind

# calculate energy from fit

let fit_μ = grp.attrs[ "fit_μ", float ]

dfLoc = dfLoc.calcEnergyFromFits(fit_μ, tfKind)

df.add dfLoc

proc calcInterp (ctx: LikelihoodContext, df: DataFrame ): DataFrame =

# walk all rows

# feed ecc, ldiv, frac into logL and return a DF with

result = df.mutate(f{ float: "logL" ~ ctx.calcLikelihoodForEvent(`energy`,

`eccentricity`,

`lengthDivRmsTrans`,

`fractionInTransverseRms`)

} )

# first make plots of 3 logL variables to see their correlations

ggplot(df, aes( "eccentricity", "lengthDivRmsTrans", color = "fractionInTransverseRms" ) ) +

geom_point(size = 1.0 ) +

ggtitle( "lnL variables of all (cleaned) CDL data for correlations" ) +

ggsave( "~/phd/Figs/background/correlation_ecc_ldiv_frac.pdf", dataAsBitmap = true )

ggplot(df, aes( "eccentricity", "fractionInTransverseRms", color = "lengthDivRmsTrans" ) ) +

geom_point(size = 1.0 ) +

ggtitle( "lnL variables of all (cleaned) CDL data for correlations" ) +

ggsave( "~/phd/Figs/background/correlation_ecc_frac_ldiv.pdf", dataAsBitmap = true )

ggplot(df, aes( "lengthDivRmsTrans", "fractionInTransverseRms", color = "eccentricity" ) ) +

geom_point(size = 1.0 ) +

ggtitle( "lnL variables of all (cleaned) CDL data for correlations" ) +

ggsave( "~/phd/Figs/background/correlation_ldiv_frac_ecc.pdf", dataAsBitmap = true )

df = df.filter(f{`eccentricity` < 2.5 } )

ggplot(df, aes( "eccentricity", "lengthDivRmsTrans", color = "fractionInTransverseRms" ) ) +

geom_point(size = 1.0 ) +

ggtitle( "lnL variables of all (cleaned) CDL data for correlations (ε < 2.5)" ) +

ggsave( "~/phd/Figs/background/correlation_ecc_ldiv_frac_ecc_smaller_2_5.pdf", dataAsBitmap = true )

ggplot(df, aes( "eccentricity", "fractionInTransverseRms", color = "lengthDivRmsTrans" ) ) +

geom_point(size = 1.0 ) +

ggtitle( "lnL variables of all (cleaned) CDL data for correlations (ε < 2.5)" ) +

ggsave( "~/phd/Figs/background/correlation_ecc_frac_ldiv_ecc_smaller_2_5.pdf", dataAsBitmap = true )

ggplot(df, aes( "lengthDivRmsTrans", "fractionInTransverseRms", color = "eccentricity" ) ) +

geom_point(size = 1.0 ) +

ggtitle( "lnL variables of all (cleaned) CDL data for correlations (ε < 2.5)" ) +

ggsave( "~/phd/Figs/background/correlation_ldiv_frac_ecc_ecc_smaller_2_5.pdf", dataAsBitmap = true )

from std/sequtils import concat

# now generate the plot of the logL values for all cleaned CDL data. We will compare the

# case of no morphing with the linear morphing case

proc getLogL (df: DataFrame, mk: MorphingKind ): ( DataFrame, DataFrame ) =

let ctx = initLikelihoodContext(cdlFile, yr2018, crGold, igEnergyFromCharge, Timepix1, mk, useLnLCut = true )

var dfMorph = ctx.calcInterp(df)

dfMorph[ "Morphing?" ] = $mk

let cutVals = ctx.calcCutValueTab()

case cutVals.morphKind

of mkNone:

let lineEnergies = getEnergyBinning()

let tab = getInverseXrayRefTable()

var cuts = newSeq [ float ]()

var energies = @[ 0.0 ]

var lastCut = Inf

var lastE = Inf

for k, v in tab:

let cut = cutVals[k]

if classify(lastCut) != fcInf:

cuts.add lastCut

energies.add lastE

cuts.add cut

lastCut = cut

let E = lineEnergies[v]

energies.add E

lastE = E

cuts.add cuts[^1 ] # add last value again to draw line up

echo energies.len, " vs ", cuts.len

let dfCuts = toDf( {energies, cuts, "Morphing?" : $cutVals.morphKind} )

result = (dfCuts, dfMorph)

of mkLinear:

let energies = concat(@[ 0.0 ], cutVals.lnLCutEnergies, @[ 20.0 ] )

let cutsSeq = cutVals.lnLCutValues.toSeq1D

let cuts = concat(@[cutVals.lnLCutValues[ 0 ] ], cutsSeq, @[cutsSeq[^1 ] ] )

let dfCuts = toDf( { "energies" : energies, "cuts" : cuts, "Morphing?" : $cutVals.morphKind} )

result = (dfCuts, dfMorph)

var dfMorph = newDataFrame()

let (dfCutsNone, dfNone) = getLogL(df, mkNone)

let (dfCutsLinear, dfLinear) = getLogL(df, mkLinear)

dfMorph.add dfNone

dfMorph.add dfLinear

var dfCuts = newDataFrame()

dfCuts.add dfCutsNone

dfCuts.add dfCutsLinear

# dfCuts.showBrowser()

echo dfMorph

ggplot(dfMorph, aes( "logL", "energy", color = factor( "Target" ) ) ) +

facet_wrap( "Morphing?" ) +

geom_point(size = 1.0 ) +

geom_line(data = dfCuts, aes = aes( "cuts", "energies" ) ) + # , color = "Morphing?")) +

ggtitle(r"$\ln\mathcal{L}$ values of all (cleaned) CDL data against energy" ) +

themeLatex(fWidth = 0.9, width = 600, baseTheme = singlePlot) +

ylab( "Energy [keV]" ) + xlab(r"$\ln\mathcal{L}$" ) +

ggsave( "~/phd/Figs/background/logL_of_CDL_vs_energy.pdf", width = 1000, height = 600, dataAsBitmap = true )

12.2.6. Definition of the likelihood distribution

With our reference distributions defined it is time to look back at the equation for the definition of the likelihood, eq. \eqref{eq:background:likelihood_def}.

Note: To avoid numerical issues dealing with very small probabilities, the actual relation evaluated numerically is the negative log of the likelihood:

\[ -\ln \mathcal{L}(ε, f, l) = - \ln \mathcal{P}_ε(ε) - \ln \mathcal{P}_f(f) - \ln \mathcal{P}_l(l). \]

By considering a single fluorescence line, the three reference distributions \(\mathcal{P}_i(i)\) make up the likelihood \(\mathcal{L}(ε, l, f)\) function for that energy; a function of the variables \(ε, f, l\). In order to use the likelihood function as a classifier for X-ray like clusters we need a one dimensional expression. This is where the likelihood distribution \(\mathfrak{L}\) comes in. We reuse all the clusters used to define the reference distributions \(\mathcal{P}_{ε,f,l}\) and compute their likelihood values \(\{ \mathcal{L}_j \}\), where \(j\) is the index of the \(j\text{-th}\) cluster. By then computing the histogram of the set of all these likelihood values, we obtain the likelihood distribution

\[ \mathfrak{L} = \text{histogram}\left( \{ \mathcal{L}_j \} \right). \]

All these distributions are shown in fig. 8 as negative log likelihood distributions. 8 We see that the distributions change slightly in shape and move towards larger \(-\ln \mathcal{L}\) values towards the lower energy target/filter combinations. The shape change is most notably due to the significant integer nature of the 'fraction in transverse RMS' \(f\) variable, as seen in fig. 7. The shift to larger values expresses that the reference distributions \(\mathcal{P}_i\) become wider and thus each bin has lower probability.

To finally classify events as signal or background using the likelihood distribution, one sets a desired "software efficiency" \(ε_{\text{eff}}\), which is defined as:

\begin{equation} \label{eq:background:lnL:cut_condition} ε_{\text{eff}} = \frac{∫_0^{\mathcal{L'}} \mathfrak{L}(\mathcal{L}) \, \mathrm{d}\mathcal{L}}{∫_0^{∞}\mathfrak{L}(\mathcal{L}) \, \mathrm{d} \mathcal{L}}. \end{equation}The likelihood value \(\mathcal{L}'\) is the value corresponding to the \(ε_{\text{eff}}^{\text{th}}\) percentile of the likelihood distribution \(\mathfrak{L}\). In practical terms one computes the normalized cumulative sum of the log likelihood and searches for the point at which the desired \(ε_{\text{eff}}\) is reached. The typical software efficiency we aim for is \(\SI{80}{\percent}\). Classification as X-ray-like then is simply any cluster with a \(-\ln\mathcal{L}\) value smaller than the cut value \(-\ln\mathcal{L}'\). Note that this value \(\mathcal{L}'\) has to be determined for each likelihood distribution.

To summarize the derivation of the likelihood distribution \(\mathfrak{L}\) and its usage as a classifier as a 'recipe':

- compute the reference distributions \(\mathcal{P}_i\) as described in sec. 12.2.5,

- take the raw cluster data (unbinned data!) of those clusters that define the \(\mathcal{P}_i\) and feed each of these into eq. \eqref{eq:background:likelihood_def} for a single likelihood value \(\mathcal{L}_i\) each,

- compute the histogram of the set of all these likelihood values \(\{ \mathcal{L}_i \}\) to define the likelihood distribution \(\mathfrak{L}\).

- define a desired 'software efficiency' \(ε_{\text{eff}}\) and compute the corresponding likelihood value \(\mathcal{L}_c\) using eq. \eqref{eq:background:lnL:cut_condition},

- any cluster with \(\mathcal{L}_i \leq \mathcal{L}_c\) is considered X-ray-like with efficiency \(ε_{\text{eff}}\).

Note that due to the usage of negative log likelihoods the raw data often contains infinities, which are just a side effect of picking up a zero probability from one of the reference distributions for a particular cluster. In reality the reference distributions should be a continuous distribution that is nowhere exactly zero. However, due to limited statistics there is a small range of non-zero probabilities (most bins are empty outside the main range). For all practical purposes this does not matter, but it does explain the rather hard cutoff from 'sensible' likelihood values to infinities in the raw data.

12.2.6.1. Generate plots of the likelihood and reference distributions extended

In order to generate plots of the reference distributions as well as

the likelihood distributions, we use the TimepixAnalysis/Plotting/plotCdl tool (adjust the

path to the calibration-cdl-2018.h5 according to your system):

ESCAPE_LATEX=true USE_TEX=true \

./plotCdl \

-c ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 \

--outpath ~/phd/Figs/CDL

which generates a range of figures in the local out directory.

Among them:

- ridgeline plots for each property, as well as facet plots

- a combined ridgeline plot of all reference distributions side by side as a ridgeline each (used in the above section)

- plots of the CDL energies using

energyFromCharge - plots of the likelihood distributions. Both as a outline and ridgeline plot.

Note that this tool can also create comparisons between a background dataset and their properties and the reference data!

For now we use:

-

out/ridgeline_all_properties_side_by_side.pdf -

out/eccentricity_facet_calibration-cdl-2018.h5.pdf -

out/fractionInTransverseRms_facet_calibration-cdl-2018.h5.pdf -

out/lengthDivRmsTrans_facet_calibration-cdl-2018.h5.pdf -

out/logL_ridgeline.pdf

which also all live in phd/Figs/CDL.

12.2.7. Energy interpolation of likelihood distributions

For the GridPix detector used in 2014/15, similar X-ray tube data was taken and each of the 8 X-ray tube energies were assigned an energy interval. The likelihood distribution to use for each cluster was chosen based on which energy interval the cluster's energy falls into. This leads to discontinuities of the properties at the interval boundaries due to the energy dependence of the cluster properties as seen in fig. 1(b). This change is of course continuous instead of discrete. It can then lead to jumps in the efficiency of the background suppression method and thus in the achieved background rate. It seems a safe assumption that the reference distributions undergo a continuous change for changing energies of the X-rays. Therefore, to avoid discontinuities we perform a linear interpolation for each cluster with energy \(E_β\) between the closest two neighboring X-ray tube energies \(E_α\) and \(E_γ\) in each probability density \(\mathcal{P}_i\) at the cluster's properties. With \(ΔE = E_α - E_γ\) the difference in energy between the closest two X-ray tube energies, each probability density is then interpolated to:

\[ \mathcal{P}_i(E_β, x_i) = \left(1 - \frac{|E_β - E_α|}{ΔE}\right) · \mathcal{P}_i(E_α, x_i) + \left( 1 - \frac{|E_γ - E_β|}{ΔE} \right) · \mathcal{P}_i(E_γ, x_i) . \]

Each probability density of the closest neighbors is evaluated at the cluster's property \(x_i\) and the linear interpolation weighted by the distance to each energy is computed.

The choice for a linear interpolation was made after different ideas were tried. Most importantly, a linear interpolation does not yield unphysical results (for example negative bin counts in interpolated data, which can happen in a spline interpolation) and yields very good results in the cases that can be tested, namely reconstructing a known likelihood distribution \(B\) by doing a linear interpolation between the two outer neighbors \(A\) and \(C\). 9(a) shows this idea using the fraction in transverse RMS variable. This variable is shown here as it has the strongest obvious shape differences going from line to line. The green histogram corresponds to interpolated bins based on the difference in energy between the line above and below the target (hence it is not done for the outer lines, as there is no 'partner' above / below). Despite the interpolation covering an energy range almost twice as large as used in practice, over- or underestimation of the interpolation (green) is minimal. To give an idea of what this results in for the interpolation, fig. 9(b) shows a heatmap of the same variable showing how the interpolation describes the energy and fraction in transverse RMS space continuously.

Given that such an interpolation works as well as it does on recovering a known line, implies that a linear interpolation on 'half' 9 the energy interval in practice should yield reasonably realistic distributions, certainly much better than allowing a discrete jump at specific boundaries.

See appendix 28 for a lot more information about the ideas considered and comparisons to the approach without interpolation. In particular fig. 140, which computes the \(\ln\mathcal{L}\) values for all of the (cleaned, cuts tab. 20 applied) CDL data comparing the case of no interpolation with interpolation showing a clear improvement in the smoothness of the point cloud.

12.2.7.1. Practical note about interpolation extended

The need to define cut values \(\mathcal{L}'\) for each likelihood distribution to have a variable to cut on is one reason why the practical implementation does not use full interpolation to compute the reference distributions on the fly each time for every individual cluster energy, but instead uses a pre-calculated high resolution mesh of \(\num{1000}\) interpolated distributions. This allows to compute the cut value as well as the distributions before starting to classify all clusters, saving significant performance. With a mesh of \(\num{1000}\) energies the largest error on the energy is \(<\SI{5}{eV}\) anyhow.

12.2.7.2. Generate plots for morphing / interpolation extended

The plots are created with TimepixAnalysis/Tools/cdlMorphing:

WRITE_PLOT_CSV=true USE_TEX=true ./cdlMorphing \

--cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 \

--outpath ~/phd/Figs/CDL/cdlMorphing/

Note: The script produces the cdl_as_raster_interpolated_*

plots. These use the terminology mtNone for "no" morphing, but that

is only because we perform the morphing 'manually' in the code using

the same function as in likelihood_utils.nim. Because the other

morphing happening in that script is one using an offset of 2 (to skip

the center line!).

12.2.8. Study of interpolation of CDL distributions [/] extended

The full study and notes about how we ended up at using a linear interpolation, can be found in appendix 28.

[X]This is now in the appendix. Good enough? Link

Put here all our studies of how and why we ended up at linear interpolation!

The actual linear interpolation results (i.e. reproducing the "middle" one will be shown in the actual thesis).

12.2.9. Energy resolution

On a slight tangent, with the main fluorescence lines fitted in all the charge spectra, the position and line width can be used to compute the energy resolution of the detector:

\[ ΔE = \frac{σ}{μ} \]

where \(σ\) is a measure of the line width and \(μ\) the position (in this case in total cluster charge). Note that in some cases the full width at half maximum (FWHM) is used and in others the standard deviation (for a normal distribution the FWHM is about \(2.35 σ\)). The energy resolutions obtained by our detector in the CDL dataset is seen in fig. 10. At the lowest energies below \(\SI{1}{keV}\) the resolution is about \(ΔE \approx \SI{30}{\%}\) and generally between \(\SIrange{10}{15}{\%}\) from \(\SI{2}{keV}\) on.

12.2.9.1. Note on energy resolutions extended

Our energy resolutions, if converted to FWHM seem to be rather bad compared to best in class gaseous detectors, for which sometimes almost as low as 10% was achieved.

12.2.9.2. Generate plot of energy resolution extended

This plot is generated as part of the cdl_spectrum_creation.nim

program. See sec. 12.2.2.4 for the commands.

12.3. Application of likelihood cut for background rate

By applying the likelihood cut method introduced in the first part of this chapter to the background data of CAST, we can extract all clusters that are X-ray-like and therefore describe the irreducible background rate. Unless otherwise stated the following plots use a software efficiency of \(\SI{80}{\%}\).

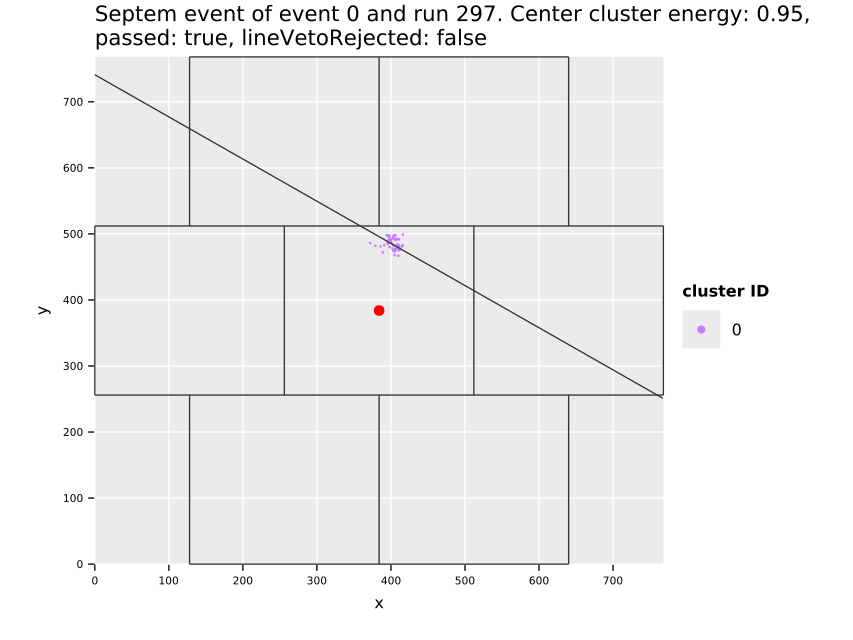

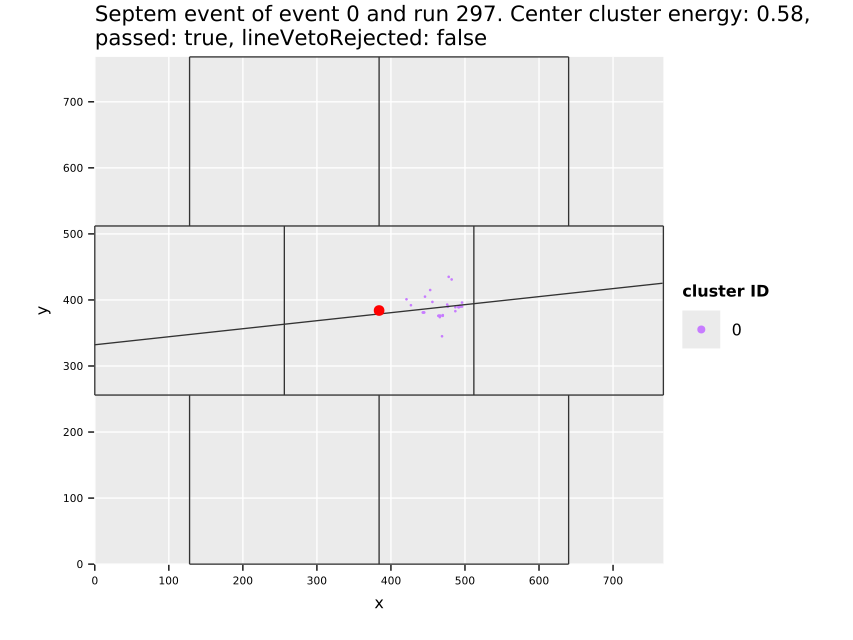

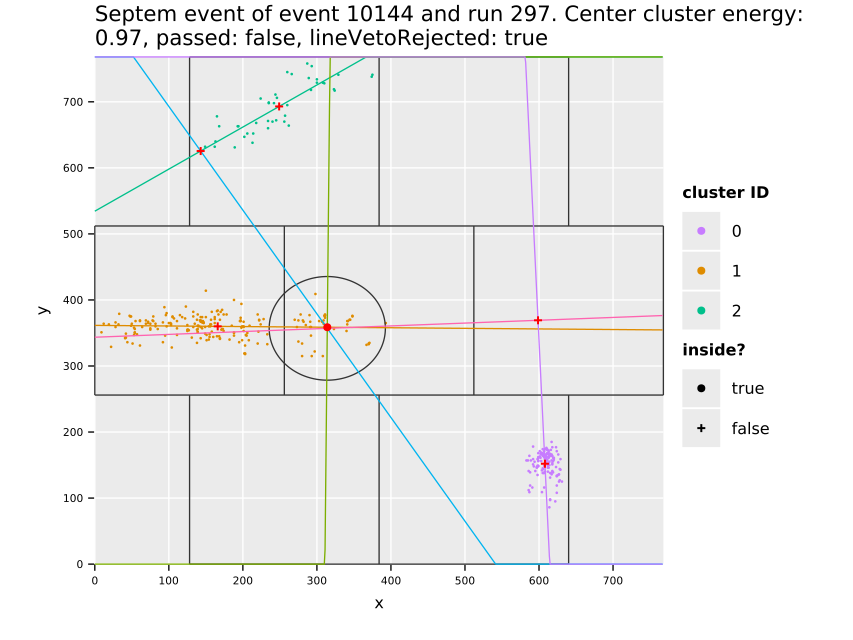

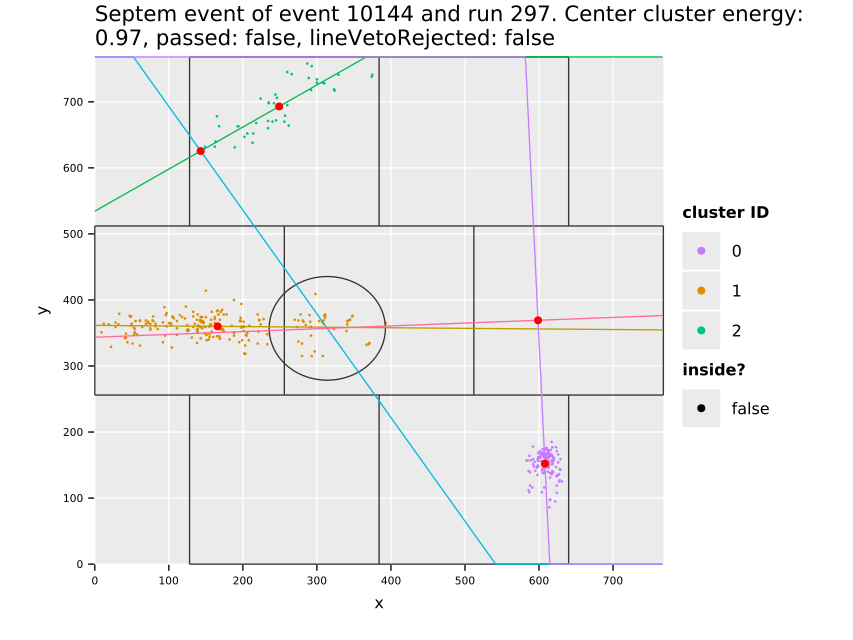

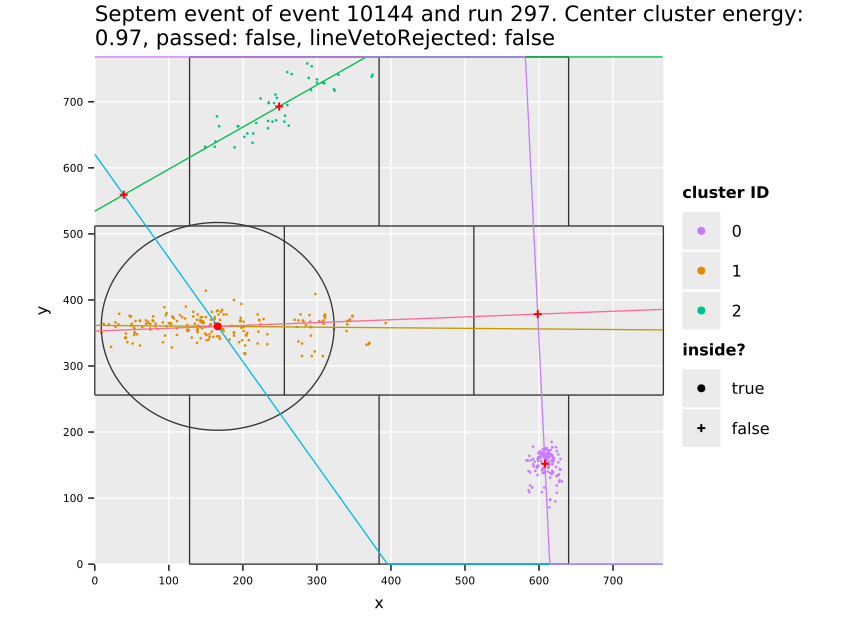

Fig. 11(a) shows the cluster centers and their distribution over the whole center GridPix, which highlights the extremely uneven distribution of background. The increase towards the edges and in particular the corners is due to events being cut off. Statistically by cutting off a piece of a track-like event, the resulting event likely becomes more spherical than before. In particular in a corner where two sides are cut off potentially (see also sec. 7.10). This is an aspect the detector vetoes help with, see sec. 12.5.3. In addition the plot shows some smaller regions of few pixel diameter that have more activity, due to minor noise. With about \(\num{74000}\) clusters left on the center chip, the likelihood cut at \(\SI{80}{\percent}\) software efficiency represents a background suppression of about a factor \(\num{20}\) (compare tab. 14, \(\sim\num{1.5e6}\) events on the center chip over the entire CAST data taking). In the regions towards the center of the chip, the suppression is of course much higher. Fig. 11(b) shows what the background suppression looks like locally when comparing the number of clusters in a small region of the chip to the total number of raw clusters that were detected. Note that this is based on the assumption that the raw data is homogeneously distributed. See appendix 29 for occupancy maps of the raw data.

The distribution of the X-ray like clusters in the background data motivate on the one hand to consider local background rates for a physics analysis and at the same time the selection of a specific region in which a benchmark background rate can be defined. For this purpose (Christoph Krieger 2018) defines different detector regions in which the background rate is computed and treated as constant. One of these, termed the 'gold region' is a square around the center of \(\SI{5}{mm}\) side length (visible as red square in fig. 11(a) and a bit less than 3x3 tiles around center in fig. 11(b)). All background rate plots unless otherwise specified in the remainder of the thesis always refer to this region of low background.

Using the \(\ln\mathcal{L}\) approach for the GridPix data taken at CAST in 2017/18 at a software efficiency of \(ε_{\text{eff}} = \SI{80}{\%}\) then, a background rate shown in fig. 12 is achieved. The average background rate between \(\SIrange{0}{8}{keV}\) in this case is \(\SI{2.12423(9666)e-05}{keV⁻¹.cm⁻².s⁻¹}\).

This is comparable to the background rate presented in (Christoph Krieger 2018), and reproduced here in in fig. 15 for the 2014/15 CAST GridPix detector. For this result no additional detector features over those available for the 2014/15 detector are used and the classification technique is essentially the same. 10 In order to improve on this background rate we will first look at a different classification technique based on artificial neural networks. Then afterwards we will go through the different detector features and discuss how they can further improve the background rate.

12.3.1. Generate background rate and cluster plot [0/2] extended

[ ]NOTE: THE PLOT WE CURRENTLY GENERATE: DOES IT USE TRACKING INFO OR NOT?[ ]EXPLAIN AND SHOW HOW TO INSERT TRACKING INFO

./cast_log_reader tracking \

-p ../resources/LogFiles/tracking-logs \

--startTime 2018/05/01 \

--endTime 2018/12/31 \

--h5out ~/CastData/data/DataRuns2018_Reco.h5 \

--dryRun

With the dryRun option you are only presented with what would be

written. Run without to actually add the data.

To generate the background rate plot we need:

- the reconstructed data files for the background data at CAST

- (optional for this part): the slow control and tracking logs of CAST

- the tracking information added to the reconstructed background

data files to compute the background rate only for tracking or

only for signal candidate data, done by running the

cast_log_readerwith the reconstructed data files as input

- the reconstructed CDL data and the

calibration-cdl-2018.h5file generated from it for the reference and likelihood distributions

To compute the background rate using these inputs we use the

likelihood tool first in order to apply the likelihood cut method

according to a desired software efficiency defined in the

config.toml file. Afterwards we can use another tool to generate the

plot of the background rate (which takes care of scaling the data to a

rate etc.).

The relevant section of the config.toml file should look like this:

[Likelihood]

# the signal efficiency to be used for the logL cut (percentage of X-rays of the

# reference distributions that will be recovered with the corresponding cut value)

signalEfficiency = 0.8

# the CDL morphing technique to be used (see `MorphingKind` enum), none or linear

morphingKind = "Linear"

# clustering algorithm for septem veto

clusterAlgo = "dbscan" # choose from {"default", "dbscan"}

# the search radius for the cluster finding algorithm in pixel

searchRadius = 50 # for default clustering algorithm

epsilon = 65 # for DBSCAN algorithm

[CDL]

# whether to fit the CDL spectra by run or by target/filter combination.

# If `true` the resulting `calibration-cdl*.h5` file will contain sub groups

# for each run in each target/filter combination group!

fitByRun = true

(linear morphing, 80% efficiency, and CDL based on fits per run)

In total we'll want the following files:

- for years 2017 & 2018:

- chip region crAll & crGold:

- no vetoes

- scinti veto

- FADC veto

- septem veto

- line veto

- chip region crAll & crGold:

[X]USEcreateAllLikelihoodCombinationsfor it after short rewrite

In order to generate all likelihood output files (after having

computed the likelihood datasets in the data files and added the

tracking information!) we will use the

createAllLikelihoodCombinations tool (rename please)

[ ]INSERT TRACKING INFORMATION AND ADD THE--trackingFLAG TOLIKELIHOOD[ ]RERUN THE BELOW AND CHANGE PATHS TO DIRECTLY IN PHD DIRECTORY!

- UPDATE: The current files we will likely use are generated by:

All standard variants including the different FADC percentiles:

./createAllLikelihoodCombinations \

--f2017 ~/CastData/data/DataRuns2017_Reco.h5 \

--f2018 ~/CastData/data/DataRuns2018_Reco.h5 \

--c2017 ~/CastData/data/CalibrationRuns2017_Reco.h5 \

--c2018 ~/CastData/data/CalibrationRuns2018_Reco.h5 \

--regions crGold --regions crAll \

--vetoSets "{fkScinti, fkFadc, fkSeptem, fkLineVeto, fkExclusiveLineVeto}" \

--fadcVetoPercentiles 0.9 --fadcVetoPercentiles 0.95 --fadcVetoPercentiles 0.99 \

--out /t/lhood_outputs_adaptive_fadc \

--cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 \

--multiprocessing

The septem + line variants without the FADC:

./createAllLikelihoodCombinations \

--f2017 ~/CastData/data/DataRuns2017_Reco.h5 \

--f2018 ~/CastData/data/DataRuns2018_Reco.h5 \

--c2017 ~/CastData/data/CalibrationRuns2017_Reco.h5 \

--c2018 ~/CastData/data/CalibrationRuns2018_Reco.h5 \

--regions crGold --regions crAll \

--vetoSets "{+fkScinti, fkSeptem, fkLineVeto, fkExclusiveLineVeto}" \

--out /t/lhood_outputs_adaptive_fadc \

--cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 \

--multiprocessing

and the lnL cut efficiency variants for 70 and 90% for the septem + line + FADC@90% variants:

./createAllLikelihoodCombinations \

--f2017 ~/CastData/data/DataRuns2017_Reco.h5 \

--f2018 ~/CastData/data/DataRuns2018_Reco.h5 \

--c2017 ~/CastData/data/CalibrationRuns2017_Reco.h5 \

--c2018 ~/CastData/data/CalibrationRuns2018_Reco.h5 \

--regions crGold --regions crAll \

--signalEfficiency 0.7 --signalEfficiency 0.9 \

--vetoSets "{+fkScinti, +fkFadc, +fkSeptem, fkLineVeto}" \

--fadcVetoPercentile 0.9 \

--out /t/lhood_outputs_adaptive_fadc \

--cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 \

--multiprocessing \

--dryRun

which for the time being are here: ./../org/resources/lhood_limits_automation_correct_duration/

cd $TPA/Analysis

./createAllLikelihoodCombinations --f2017 ~/CastData/data/DataRuns2017_Reco.h5 \

--f2018 ~/CastData/data/DataRuns2018_Reco.h5 \

--c2017 ~/CastData/data/CalibrationRuns2017_Reco.h5 \

--c2018 ~/CastData/data/CalibrationRuns2018_Reco.h5 \

--regions crGold \

--regions crAll \

--vetoes fkNoVeto \

--vetoes fkScinti \

--vetoes fkFadc \

--vetoes fkSeptem \

--vetoes fkLineVeto \

--vetoes fkExclusiveLineVeto \

--out ~/phd/resources/background/autoGen \

--cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5 \

--multiprocessing \

--dryRun

using the --dryRun option first to see the generated commands that

will be run.

We will run this over night now using the new

default eccentricity cutoff for the line veto of 1.5 (motivated by the

ratio of fraction passing real over fake!) as well as the

lvRegularNoHLC line veto kind (although this should not matter, as

we won't use the line veto without the septem veto!). The files are

now in ./resources/background/autoGen/.

[ ]WARNING: THE GENERATED FILES HERE STILL USE OUR HACKED IN CHANGED NUMBER OF BINS FOR THE REFERENCE DISTRIBUTIONS! -> TWICE AS MANY BINS!

[ ]THESE ARE OUTDATED. WE USE OUR TOOL

First let's apply the likelihood tool to the 2017 data for the gold region:

likelihood -f ~/CastData/data/DataRuns2017_Reco.h5 --computeLogL \

--region crGold \

--cdlYear 2018 \

--h5out /home/basti/phd/resources/background/lhood_2017_crGold_80eff_no_vetoes.h5 \

--cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5

and the same for the end of 2018 data:

likelihood -f ~/CastData/data/DataRuns2018_Reco.h5 --computeLogL \

--region crGold \

--cdlYear 2018 \

--h5out /home/basti/phd/resources/background/lhood_2018_crGold_80eff_no_vetoes.h5 \

--cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5

and now the same for the whole chip:

likelihood -f ~/CastData/data/DataRuns2017_Reco.h5 --computeLogL \

--region crAll \

--cdlYear 2018 \

--h5out /home/basti/phd/resources/background/lhood_2017_crAll_80eff_no_vetoes.h5 \

--cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5

and the same for the end of 2018 data:

likelihood -f ~/CastData/data/DataRuns2018_Reco.h5 --computeLogL \

--region crAll \

--cdlYear 2018 \

--h5out /home/basti/phd/resources/background/lhood_2018_crAll_80eff_no_vetoes.h5 \

--cdlFile ~/CastData/data/CDL_2019/calibration-cdl-2018.h5

With this we can first create the plot of the cluster centers from the

all chip files using plotClusterCenters:

plotBackgroundClusters \

~/org/resources/lhood_lnL_17_11_23_septem_fixed/lhood_c18_R2_crAll_sEff_0.8_lnL.h5 \

~/org/resources/lhood_lnL_17_11_23_septem_fixed/lhood_c18_R3_crAll_sEff_0.8_lnL.h5 \

--zMax 30 \

--title "X-ray like clusters of CAST data" \

--outpath ~/phd/Figs/backgroundClusters/ \

--showGoldRegion \

--backgroundSuppression \

--energyMin 0.2 --energyMax 12.0 \

--suffix "_lnL80_no_vetoes" \

--filterNoisyPixels \

--useTikZ

From the logL output files created by

createAllLikelihoodCombinations, let's now create the 'classical'