13. Limit calculation Limit

In this chapter we will introduce a generic limit calculation method, which can be used to compute limits on different axion or ALP coupling constants.

The first part of this chapter focuses on the more theoretical and conceptual aspects of our limit calculation methods. The second half discusses our inputs in detail and shows our expected and observed limits.

We will start with an introduction of the method itself, a Bayesian extended likelihood approach, sec. 13.1. Step by step we will introduce the likelihood function we use (sec. 13.2), what the individual pieces are and how likelihood values are computed (sec. 13.3) and how a limit is computed from such a likelihood function, sec. 13.4. Then we introduce our approach to compute an expected limit by sampling toy candidates 1, sec. 13.5. After this we will extend our approach in sec. 13.6 to include systematic uncertainties. Due to added complexity in evaluating the thus produced likelihood function, we discuss our Markov Chain Monte Carlo (MCMC) approach to evaluate the likelihood function in sec. 13.7. This concludes the first half of the chapter. Please look into Lista's book (Lista 2023) if you would like more details about Bayesian limit calculations involving nuisance parameters. Barlow (Barlow 1993) and Cowan (Cowan 1998) are also recommended for general statistics concepts.

From here we introduce all ingredients entering the likelihood function in detail, sec. 13.10. Next we discuss our systematics, sec. 13.11, after which we explain our MCMC approach in more detail (number of chains, parameters bounds, starting parameters etc.), sec. 13.12.

At this point we can finally tie everything together and discuss the expected limits obtained for a variety of different classifier and veto choices, sec. 13.13. For the best performing setup – the one yielding the best expected limit – we present our axion candidates, 13.14. Based on these we present our observed limit in sec. 13.15. Finally, in sec. 13.16 we briefly consider two other coupling constants, the axion-photon coupling \(g_{aγ}\) and the chameleon coupling \(β_γ\).

13.1. Limit method - introduction

We start with a few words on the terminology we use and what we have in mind when we talk about 'limits'.

- Context and terminology

An experiment tries to detect a new phenomenon of the kind where you expect very little signal compared to background sources. We have a dataset in which the experiment is sensitive to the phenomenon, another dataset in which it is not sensitive and finally a theoretical model of our expected signal.

Any data entry (after cleaning) in the sensitive dataset is a candidate. Each candidate is drawn from a distribution of the present background plus the expected signal contribution (\(c = s + b\)). Any entry in the non sensitive dataset is background only.

- Goal

- Compute the value of a parameter (coupling constant) such that there is \(\SI{95}{\%}\) confidence that the combined hypothesis of signal and background sources are compatible with the background only hypothesis.

- Condition

Our experiment should be such that the data in some "channels" of our choice can be modeled by a Poisson distribution

\[ P_{\text{Pois}}(k; λ) = \frac{λ^k e^{-λ}}{k!}. \]

Each such channel with an expectation value of \(λ\) counts has probability \(P_{\text{Pois}}(k; λ)\) to measure \(k\) counts. Because the Poisson distribution (as written here) is a probability density function, multiple different channels can be combined to a "likelihood" for an experiment outcome by taking the product of each channel's Poisson probability

\[ \mathcal{L}(λ) = \prod_i P_{i, \text{Pois}}(k; λ) = \prod_i \frac{λ_i^{k_i} e^{-λ_i}}{k_i!}. \]

Given a set of \(k_i\) recorded counts for all different channels \(i\) with expectation value \(λ_i\) the "likelihood" gives us the literal likelihood to record exactly that experimental outcome. Note that the parameter of the likelihood function is the mean \(λ\) and not the recorded data \(k\)! The likelihood function describes the likelihood for a fixed set of data (our real measured counts) for different parameters (our signal & background models, where the background model is constant as well).

In addition, the method described in the next section is valid under the assumption that our experiment did not have a statistically significant detection in the signal sensitive dataset compared to the background dataset.

13.2. Limit method - likelihood function \(\mathcal{L}\)

The likelihood function as described in the previous section is not optimal to compute a limit for the usage with different datasets as described before. 2 For that case we want to have some kind of a "test statistic" that relates the sensitive dataset with its seen candidates to the background dataset. For practical purposes we prefer to define a statistic, which is monotonically increasing in the number of candidates (see for example (Junk 1999)). There are different choices possible, but the one we use is

\[ \mathcal{L}(s, b) = \prod_i \frac{P_{\text{pois}}(c_i; s_i + b_i)}{P_{\text{pois}}(c_i; b_i)}, \]

so the ratio of the signal plus background over the pure background hypothesis. The number \(c_i\) is the real number of measured candidates. So the numerator gives the probability to measure \(c_i\) counts in each channel \(i\) given the signal plus background hypothesis. On the other hand the denominator measures the probability to measure \(c_i\) counts in each channel \(i\) assuming only the background hypothesis.

Note: For each channel \(i\) the ratio of probabilities itself is not strictly speaking a probability density function, because the integral

\[ \int_{-∞}^{∞}Q\, \mathrm{d}x = N \neq 1, \]

with \(Q\) an arbitrary distribution. \(N\) can be interpreted as a hypothetical number of total number of counts measured in the experiment. A PDF requires this integral to be 1.

As a result the full construct \(\mathcal{L}\) of the product of these ratios is technically not a likelihood function either. It is usually referred to as an "extended likelihood function".

For all practical purposes though we will continue to treat is as a likelihood function and call it \(\mathcal{L}\) as usual.

Note the important fact that \(\mathcal{L}\) really is only a function of our signal hypothesis \(s\) and our background model \(b\). Each experimental outcome has its own \(\mathcal{L}\). This is precisely why the likelihood function describes everything about an experimental outcome (at least if the signal and background models are reasonably understood) and thus different experiments can be combined by combining them in "likelihood space" (multiplying their \(\mathcal{L}\) or adding \(\ln \mathcal{L}\) values) to get a combined likelihood to compute a limit for.

- Deriving a practical version of \(\mathcal{L}\)

The version of \(\mathcal{L}\) presented above is still quite impractical to use and the ratio of exponentials of the Poisson distributions can be simplified significantly:

\begin{align*} \mathcal{L} &= \prod_i \frac{P(c_i, s_i + b_i)}{P(c_i, b_i)} = \prod_i \frac{ \frac{(s_i + b_i)^{n_i}}{n_i!} e^{-(s_i + b_i)} }{ \frac{b_i^{n_i}}{n_i!} e^{-b_i}} \\ &= \prod_i \frac{e^{-s_i} (s_i + b_i)^{c_i}}{b_i^{c_i}} = e^{-s_\text{tot}} \prod_i \frac{(s_i + b_i)^{c_i}}{b_i^{c_i}} \\ &= e^{-s_\text{tot}} \prod_i \left(1 + \frac{s_i}{b_i} \right)^{c_i} \end{align*}This really is the heart of computing a limit with a number of \(s_{\text{tot}}\) expected events from the signal hypothesis (depending on the parameter to be studied, the coupling constant), \(c_i\) measured counts in each channel and \(s_i\) expected signal events and \(b_i\) expected background events in that channel.

As mentioned previously though, the choice of what a channel is, is completely up to us! One such choice might be binning the candidates in energy. However, there is one choice that is particularly simple and is often referred to as the "unbinned likelihood". Namely, we create channels in time such that each "time bin" is so short as to either have 0 entries (most channels) or 1 entry. This means we have a large number of channels, but because of our definition of \(\mathcal{L}\) this does not matter. All channels with 0 candidates do not contribute to \(\mathcal{L}\) (they are \(\left(1 + \frac{s_i}{b_i}\right)^0 = 1\)). As a result our expression of \(\mathcal{L}\) simplifies further to:

\[ \mathcal{L} = e^{-s_\text{tot}} \prod_i \left(1 + \frac{s_i}{b_i}\right) \]

where \(i\) is now all channels where a candidate is contained (\(c_i = 1\)).

13.2.1. Notes on more explanations extended

For more explanations on this, I really recommend to read Thomas Junk 1999

paper about mclimit, (Junk 1999). While it only covers

binned limit approaches, it is anyhow very clear in its explanations.

In general I recommend the following resources on statistics and limit calculations. Roughly in the order in which I would recommend them.

- Luca Lista's book on statistics, (Lista 2023) -> If you log in to a CERN account, you can just download it directly from Springer (I think that's the reason it works for me)

- Luca List also uploaded a 'shortened version' if you will to arxiv (Lista 2016).

- Barlow's book on statistics is still a good book, but from 1989, (Barlow 1993)

- Barlow also wrote a paper for the arxiv recently (Barlow 2019)

- Cowan's book on statistics, (Cowan 1998)

Cowan's and Barlow's books are good to check both. They mostly cover the same topics, but reading in each can be helpful. Luca Lista is my personal preference though, because it seems clearer to me. Also it's more up to date with modern methods.

For the topic here in particular, maybe also see my own notes from a few years back trying to better understand the maths behind CLS and CLs+b: ./../org/Doc/StatusAndProgress.html

13.3. Limit method - computing \(\mathcal{L}\)

Our simplified version of \(\mathcal{L}\) using very short time bins now allows to explicitly compute the likelihood for a set of parameters. Let's now look at each of the constituents \(s_{\text{tot}}\), \(s_i\) and \(b_i\) and discuss how they are computed. We will focus on the explicit case of an X-ray detector behind a telescope at CAST.

Here it is important to note that the signal hypothesis depends on the coupling constant we wish to compute a limit for, we will just call it \(g\) in the remainder (it may be \(g_{aγ}\) or \(g_{ae}\) or any other coupling constant). This is the actual parameter of \(\mathcal{L}\).

First of all the signal contribution in each channel \(s_i\). It is effectively a number of counts that one would expect within the time window of the channel \(i\). While this seems tricky given that we have not explicitly defined such a window we can:

- either assume our time interval to be infinitesimally small and give a signal rate (i.e. per second)

- or make use of the neat property that our expression only contains the ratio of \(s_i\) and \(b_i\). What this means is that we can choose our units ourselves, as long as we use the same units for \(s_i\) as for \(b_i\)!

We will use the second case and scale each candidate's signal and background contribution to the total tracking time (signal sensitive dataset length). Each parameter with a subscript \(i\) is the corresponding value that the candidate has we are currently looking at (e.g. \(E_i\) is the energy of the recorded candidate \(i\) used to compute the expected signal).

\begin{equation} \label{eq:limit_method_signal_si} s_i(g²) = f(g², E_i) · A · t · P_{a \rightarrow γ}(g²_{aγ}) · ε(E_i) · r(x_i, y_i) \end{equation}where:

- \(f(g, E_i)\) is the axion flux at energy \(E_i\) in units of \(\si{keV^{-1}.cm^{-2}.s^{-1}}\) as a function of \(g²\), sec. 13.10.2,

- \(A\) is the area of the magnet bore in \(\si{cm²}\), sec. 13.10.1,

- \(t\) is the tracking time in \(\si{s}\), also sec. 13.10.1,

- \(P_{a \rightarrow γ}\) is the conversion probability of the axion converting into a photon computed via \[ P_{a \rightarrow γ}(g²_{aγ}) = \left( \frac{g_{aγ} B L}{2} \right)² \] written in natural units (meaning we need to convert \(B\) and \(L\) into values expressed in powers of \(\si{eV}\), as discussed in sec. 4.4), sec. 13.10.3,

- \(ε(E_i)\) is the combined detection efficiency, i.e. the combination of X-ray telescope effective area, the transparency of the detector window and the absorption probability of an X-ray in the gas, sec. 13.10.4,

- \(r(x_i, y_i)\) is the expected amount of flux from solar axions after it is focused by the X-ray telescope in the readout plane of the detector at the candidate's position \((x_i, y_i)\) (this requires a raytracing model). It should be expressed as a fractional value in units of \(\si{cm^{-2}}\). See sec. 13.10.6.

As a result the units of \(s_i\) are then given in \(\si{keV^{-1}.cm^{-2}}\) with the tracking time integrated out. If one computes a limit for \(g_{aγ}\) then \(f\) and \(P\) both depend on the coupling of interest, making \(s_i\) a function of \(g⁴_{aγ}\). In case of e.g. an axion-electron coupling limit \(g_{ae}\), the conversion probability can be treated as constant (with a fixed \(g_{aγ}\)).

Secondly, the background hypothesis \(b_i\) for each channel. Its value depends on whether we assume a constant background model, an energy dependent one or even an energy plus position dependent model. In either case the main point is to evaluate that background model at the position \((x_i, y_i)\) of the candidate and energy \(E_i\) of the candidate. The value should then be scaled to the same units of as \(s_i\), namely \(\si{keV^{-1}.cm^{-2}}\). Depending on how the model is defined this might just be a multiplication by the total tracking time in seconds. We discuss this in detail in sec. 13.10.8.

The final piece is the total signal \(s_{\text{tot}}\), corresponding to the total number of counts expected from our signal hypothesis for the given dataset. This is nothing else as the integration of \(s_i\) over the entire energy range and detection area. However, because \(s_i\) implies the signal for candidate \(i\), we write \(s(E, x, y)\) to mean the equivalent signal as if we had a candidate at \((E, x, y)\)

\[ s_{\text{tot}} = ∫_0^{E_{\text{max}}} ∫_A s(E, x, y)\, \mathrm{d}E\, \mathrm{d}x\, \mathrm{d}y \]

where \(A\) simply implies integrating the full area in which \((x, y)\) is defined. The axion flux is bounded within a region much smaller than the active detection area and hence all contributions outside are 0.

13.4. Limit method - computing a limit

With the above we are now able to evaluate \(\mathcal{L}\) for a set of candidates \({c_i(E_i, x_i, y_i)}\). As mentioned before it is important to realize that \(\mathcal{L}\) is a function of the coupling constant \(g\), \(\mathcal{L}(g)\) with all other parameters effectively constant in the context of "one experiment". \(g\) is a placeholder for the parameter, in which \(\mathcal{L}\) is linear, i.e. \(g²_{ae}\) for axion-electron or \(g⁴_{aγ}\) and \(β⁴_γ\) for axion-photon and chameleon, respectively.

With this in mind the 'limit' is defined as the 95-th percentile of \(\mathcal{L}(g)\) within the physical region of \(g\). The region \(g < 0\) is explicitly ignored, as a coupling constant cannot be negative! This can be rigorously justified in Bayesian statistics by saying the prior \(π(g)\) is 0 for \(g < 0\).

We can define the limit implicitly as 3

\begin{equation} \label{eq:limit_method:limit_def} 0.95 = \frac{∫_0^{g'} \mathcal{L}(g)\, \mathrm{d}g}{∫_0^∞ \mathcal{L}(g)\, \mathrm{d}g} \end{equation}In practice the integral cannot be evaluated until infinity. Fortunately, our choice of \(\mathcal{L}\) in the first place means that the function converges to \(0\) quickly for large values of \(g\). Therefore, we only need to compute values to a "large enough" value of \(g\) to get the shape of \(\mathcal{L}(g)\). From there we can use any numerical approach (via an empirical cumulative distribution function for example) to determine the coupling constant \(g'\) that corresponds to the 95-th percentile of \(\mathcal{L}(g)\).

In an intuitive sense the limit means the following: \(\SI{95}{\percent}\) of all coupling constants that reproduce the data we measured – given our signal and background hypotheses – are smaller than \(g'\).

Fig. 1 shows an example of a likelihood function of some coupling constant. The blue area is the lower \(\SI{95}{\%}\) of the parameter space and the red area is the upper \(\SI{5}{\%}\). Therefore, the limit in this particular set of toy candidates is at the intersection of the two colors.

13.4.1. Implementing a basic limit calculation method [/] extended

The following are two examples for a basic limit calculation in code. This is to showcase the basic idea without getting lost in too many details. In terms of the main thesis, we use the first example to produce a plot to illustrate how the limit is computed via the 95% percentile.

The real code we use for the limit is found here: https://github.com/Vindaar/TimepixAnalysis/blob/master/Analysis/ingrid/mcmc_limit_calculation.nim

Simplest implementation:

- single channel

- no detection efficiencies etc., just a flux that scales with \(g²\)

- constant background (due to single channel)

- no telescope, i.e. area for signal flux is the same as for background (due to no focusing)

import unchained, math

## Assumptions:

const totalTime = 100.0.h # 100 of "tracking time"

const totalArea = 10.cm² # assume 10 cm² area (magnet bore and chip! This case has no telescope)

defUnit(cm⁻²•s⁻¹)

proc flux (g²: float ): cm⁻²•s⁻¹ =

## Dummy flux. Just the coupling constant squared · 1e-6

result = 1e-6 * (g²).cm⁻²•s⁻¹

proc totalFlux (g²: float ): float =

## Flux integrated to total time and area

result = flux(g²) * totalTime.to( Second ) * totalArea

## Assume signal and background in counts of the single channel!

## (Yes, ` signal ` is the same as ` totalFlux ` in this case)

proc signal (g²: float ): float = flux(g²) * totalTime * totalArea ## Signal only depends on coupling in this simple model

proc background (): float = 1e-6.cm⁻²•s⁻¹ * totalTime * totalArea ## Single channel, i.e. constant background

proc likelihood (g²: float, cs: int ): float = ## ` cs ` = number of candidates in the single channel

result = exp(-totalFlux(g²) ) # ` e^{-s_tot} `

result *= pow( 1 + signal(g²) / background(), cs.float )

proc poisson (k: int, λ: float ): float = λ^k * exp(-λ) / (fac(k) )

echo "Background counts = ", background(), ". Probabilty to measure 4 counts given background: ", poisson( 4, background() )

echo "equal to signal counts at g = 1: ", signal( 1.0 )

echo "Likelihood at g = 1 for 4 candidates = ", likelihood( 1.0, 4 )

## Let's plot it from 0 to 3 assuming 4 candidates

import ggplotnim

let xs = linspace( 0.0, 3.0, 100 )

let ys = xs.map_inline(likelihood(x, 4 ) )

## Compute limit, CDF@95%

import algorithm

let yCumSum = ys.cumSum() # cumulative sum

let yMax = yCumSum.max # maximum of the cumulative sum

let yCdf = yCumSum.map_inline(x / yMax) # normalize to get (empirical) CDF

let limitIdx = yCdf.toSeq1D.lowerBound( 0.95 ) # limit at 95% of the CDF

echo "Limit at : ", xs[limitIdx]

let L_atLimit = ys[limitIdx]

let df = toDf(xs, ys)

let dfLimit = df.filter(f{ float: `xs` >= xs[limitIdx] } )

echo dfLimit

ggplot(df, aes( "xs", "ys" ) ) +

xlab( "Coupling constant" ) + ylab( "Likelihood" ) +

geom_line(fillColor = "blue", alpha = 0.4 ) +

geom_line(data = dfLimit, fillColor = "red" ) +

# geom_linerange(aes = aes(x = xs[limitIdx], yMin = 0.0, yMax = L_atLimit), ) +

annotate(x = xs[limitIdx], y = L_atLimit + 0.1, text = "Limit at 95% area" ) +

ggtitle( "Example likelihood and limit" ) +

themeLatex(fWidth = 0.9, width = 600, baseTheme = singlePlot, useTeX = true ) +

ggsave( "/home/basti/phd/Figs/limit/simple_likelihood_limit_example.pdf", width = 600, height = 380 )

Background counts = 3.6. Probabilty to measure 4 counts given background: 0.1912223391751322 equal to signal counts at g = 1: 3.6 Likelihood at g = 1 for 4 candidates = 0.4371795591566811 Limit at : 1.666666666666665 DataFrame with 2 columns and 45 rows: Idx xs ys dtype: float float 0 1.667 0.1253 1 1.697 0.1176 2 1.727 0.1103 3 1.758 0.1033 4 1.788 0.09679 5 1.818 0.09062 6 1.848 0.08481 7 1.879 0.07933 8 1.909 0.07417 9 1.939 0.06932 10 1.97 0.06476 11 2 0.06047 12 2.03 0.05645 13 2.061 0.05267 14 2.091 0.04912 15 2.121 0.0458 16 2.152 0.04268 17 2.182 0.03977 18 2.212 0.03703 19 2.242 0.03448

[INFO]: No plot ratio given, using golden ratio. [INFO] TeXDaemon ready for input. shellCmd: command -v lualatex shellCmd: lualatex -output-directory /home/basti/phd/Figs/limit /home/basti/phd/Figs/limit/simplelikelihoodlimitexample.tex Generated: /home/basti/phd/Figs/limit/simplelikelihoodlimitexample.pdf

More realistic implementation, above plus:

- real solar axion flux

- TODO: (detection efficiency) (could just use fixed efficiency)

- X-ray telescope without usage of local flux information

- multiple channels in energy

import unchained, math, seqmath, sequtils, algorithm

## Assumptions:

const totalTime = 100.0.h # 100 of "tracking time"

const areaBore = π * ( 2.15 * 2.15 ).cm²

const chipArea = 5.mm * 5.mm # assume all flux is focused into an area of 5x5 mm²

# on the detector. Relevant area for background!

defUnit(GeV⁻¹)

defUnit(cm⁻²•s⁻¹)

defUnit(keV⁻¹)

defUnit(keV⁻¹•cm⁻²•s⁻¹)

## Constants defining the channels and background info

const

Energies = @[ 0.5, 1.5, 2.5, 3.5, 4.5, 5.5, 6.5, 7.5, 8.5, 9.5 ].mapIt(it.keV)

Background = @[ 0.5e-5, 2.5e-5, 4.5e-5, 4.0e-5, 1.0e-5, 0.75e-5, 0.8e-5, 3e-5, 3.5e-5, 2.0e-5 ]

.mapIt(it.keV⁻¹•cm⁻²•s⁻¹) # convert to a rate

## A possible set of candidates from ` Background · chipArea · totalTime · 1 keV `

## (1e-5 · 5x5mm² · 100h = 0.9 counts)

Candidates = @[ 0, 2, 7, 3, 1, 0, 1, 4, 3, 2 ]

proc solarAxionFlux (ω: keV, g_aγ: GeV⁻¹): keV⁻¹•cm⁻²•s⁻¹ =

# axion flux produced by the Primakoff effect in solar core

# in units of keV⁻¹•m⁻²•yr⁻¹

let flux = 2.0 * 1e18.keV⁻¹•m⁻²•yr⁻¹ * (g_aγ / 1e-12.GeV⁻¹)^2 * pow(ω / 1.keV, 2.450 ) * exp(-0.829 * ω / 1.keV)

# convert flux to correct units

result = flux.to(keV⁻¹•cm⁻²•s⁻¹)

func conversionProbability (g_aγ: GeV⁻¹): UnitLess =

## the conversion probability in the CAST magnet (depends on g_aγ)

## simplified vacuum conversion prob. for small masses

let B = 9.0.T

let L = 9.26.m

result = pow( (g_aγ * B.toNaturalUnit * L.toNaturalUnit / 2.0 ), 2.0 )

from numericalnim import simpson # simpson numerical integration routine

proc totalFlux (g_aγ: GeV⁻¹): float =

## Flux integrated to total time, energy and area

# 1. integrate the solar flux

## NOTE: in practice this integration must not be done in this proc! Only perform once!

let xs = linspace( 0.0, 10.0, 100 )

let fl = xs.mapIt(solarAxionFlux(it.keV, g_aγ) )

let integral = simpson(fl.mapIt(it.float ), # convert units to float for compatibility

xs).cm⁻²•s⁻¹ # convert back to units (integrated out ` keV⁻¹ `!)

# 2. compute final flux by "integrating" out the time and area

result = integral * totalTime * areaBore * conversionProbability(g_aγ)

## NOTE: only important that signal and background have the same units!

proc signal ( E: keV, g_aγ: GeV⁻¹): keV⁻¹ =

## Returns the axion flux based on ` g ` and energy ` E `

result = solarAxionFlux( E, g_aγ) * totalTime.to( Second ) * areaBore * conversionProbability(g_aγ)

proc background ( E: keV): keV⁻¹ =

## Compute an interpolation of energies and background

## NOTE: For simplicity we only evaluate at the channel energies anyway. In practice

## one likely wants interpolation to handle all energies in the allowed range correctly!

let idx = Energies.lowerBound( E ) # get idx of this energy

## Note: area of interest is the region on the chip, in which the signal is focused!

## This also allows us to see that the "closer" we cut to the expected axion signal on the

## detector, the less background we have compared to the *fixed* signal flux!

result = ( Background [idx] * totalTime * chipArea).to(keV⁻¹)

proc likelihood (g_aγ: GeV⁻¹, energies: seq [keV], cs: seq [ int ] ): float =

## ` energies ` = energies corresponding to each channel

## ` cs ` = each element is number of counts in that energy channel

result = exp(-totalFlux(g_aγ) ) # ` e^{-s_tot} `

for i in 0 ..< cs.len:

let c = cs[i] # number of candidates in this channel

let E = energies[i] # energy of this channel

let s = signal( E, g_aγ)

let b = background( E )

result *= pow( 1 + signal( E, g_aγ) / background( E ), c.float )

## Let's plot it from 0 to 3 assuming 4 candidates

import ggplotnim

# define coupling constants

let xs = logspace(-13, -10, 300 ).mapIt(it.GeV⁻¹) # logspace 1e-13 GeV⁻¹ to 1e-8 GeV⁻¹

let ys = xs.mapIt(likelihood(it, Energies, Candidates ) )

let df = toDf( { "xs" : xs.mapIt(it.float ), ys} )

ggplot(df, aes( "xs", "ys" ) ) +

geom_line() +

ggsave( "/tmp/energy_bins_likelihood.pdf" )

## Compute limit, CDF@95%

import algorithm

# limit needs non logspace x & y data! (at least if computed in this simple way)

let xLin = linspace( 0.0, 1e-10, 1000 ).mapIt(it.GeV⁻¹)

let yLin = xLin.mapIt(likelihood(it, Energies, Candidates ) )

let yCumSum = yLin.cumSum() # cumulative sum

let yMax = yCumSum.max # maximum of the cumulative sum

let yCdf = yCumSum.mapIt(it / yMax) # normalize to get (empirical) CDF

let limitIdx = yCdf.lowerBound( 0.95 ) # limit at 95% of the CDF

echo "Limit at : ", xLin[limitIdx]

# Code outputs:

# Limit at : 6.44645e-11 GeV⁻¹

Limit at : 6.44645e-11 GeV⁻¹

13.5. Limit method - toy candidate sets and expected limits

Assuming a constant background over some chip area with only an energy dependence, the background hypothesis can be used to draw toy candidates that can be used in place for the real candidates to compute limits. In this situation the background hypothesis can be modeled as follows:

\[ B = \{ P_{\text{Pois}}(k; λ = b_i) \: | \: \text{for all energy bins } E_i \}, \]

that is, the background is the set of all energy bins \(E_i\), where each bin content is described by a Poisson distribution with a mean and expectation value of \(λ = b_i\) counts.

To compute a set of toy candidates then, we simply iterate over all energy bins and draw a number from each Poisson distribution. This is the number of candidates in that bin for the toy. Given that we assumed a constant background over the chip area, we finally need to draw the \((x_i, y_i)\) positions for each toy candidate from a uniform distribution. 4

These sets of toy candidates can be used to compute an "expected limit". The term expected limit is usually understood to mean the median of many limits computed based on representative toy candidate sets. If \(L_{t_i}\) is the limit of the toy candidate set \(t_i\), the expected limit \(⟨L⟩\) is defined as

\[ ⟨L⟩ = \mathrm{median}( \{ L_{t_i} \} ) \]

If the number of toy candidate sets is large enough, the expected limit should prove accurate. The real limit will then be below or above with \(\SI{50}{\%}\) chance each.

13.6. Limit method - extending \(\mathcal{L}\) for systematics

The aforementioned likelihood ratio assumes perfect knowledge of the inputs for the signal and background hypotheses. In practice neither of these is known perfectly though. Each input typically has associated a small systematic uncertainty (e.g. the width of the detector window is only known up to N nanometers, the pressure in the chamber only up to M millibar, magnet length only up to C centimeters etc.). These all affect the "real" numbers one should actually calculate with. So how does one treat these uncertainties?

The basic starting point is realizing that the values we use are our "best guess" of the real value. Usually it is a reasonable approximation that the real value will likely be within some standard deviation around that best guess, following a normal distribution. Further, it is a good idea to identify all systematic uncertainties and classify them by which aspect of \(s_i\), \(b_i\) or \((x_i, y_i)\) they affect (amount of signal, background or the position 5 ). Another reasonable assumption is to combine different uncertainties of the same type by

\[ Δx = \sqrt{ \sum_{i=1}^N Δx²_i }, \]

i.e. computing the euclidean radius in N dimensions, for N uncertainties of the same type.

The above explanation can be followed to encode these uncertainties into the limit calculation. For a value corresponding to our "best guess" we want to recover the likelihood function \(\mathcal{L}\) from before. The further we get away from our "best guess", the more the likelihood function should be "penalized", meaning the actual likelihood of that parameter given our data should be lower. The initial likelihood \(\mathcal{L}\) will be modified by multiplying with additional normal distributions, one for each uncertainty (4 in total in our case, signal, background, and two position uncertainties). Each adds an additional parameter, a 'nuisance parameter'.

To illustrate the details, let's look at the case of adding a single nuisance parameter. In particular we'll look at the nuisance parameter for the signal as it requires more care. The idea is to express our uncertainty of a parameter – in this case the signal – by introducing an additional parameter \(s_i'\). In contrast to \(s_i\) it describes a possible other value of \(s_i\) due to our systematic uncertainty. For simplicity we rewrite our likelihood \(\mathcal{L}\) as \(\mathcal{L}'(s_i, s_i', b_i)\):

\[ \mathcal{L}' = e^{-s'_\text{tot}} \prod_i \left(1 + \frac{s_i'}{b_i}\right) · \exp\left[-\frac{1}{2} \left(\frac{s_i' - s_i}{σ_s'}\right)² \right] \]

where \(s_i'\) takes the place of the \(s_i\). The added gaussian then provides a penalty for any deviation from \(s_i\). The standard deviation of the gaussian \(σ_s'\) is the actual systematic uncertainty on our parameter \(s_i\) in units of \(s_i\).

This form of adding a secondary parameter \(s_i'\) of the same units as \(s_i\) is not the most practical, but maybe provides the best explanation as to how the name 'penalty term' arises for the added gaussian. If \(s_i = s_i'\) then the exponential term is \(1\) meaning the likelihood remains unchanged. For any other value the exponential is \(< 1\), decreasing the likelihood \(\mathcal{L}'\).

By a change of variables we can replace the "unitful" parameter \(s_i'\) by a unitless number \(ϑ_s\). We would like the exponential to be \(\exp(-ϑ_s²/(2 σ_s²))\) to only express deviation from our best guess \(s_i\). \(ϑ_s = 0\) means no deviation and \(|ϑ_s| = 1\) implies \(s_i = -s_i'\). Note that the standard deviation of this is now \(σ_s\) and not \(σ_s'\) as seen in the expression above. This \(σ_s\) corresponds to our systematic uncertainty on the signal as a percentage.

To arrive at this expression:

\begin{align*} \frac{s_i' - s_i}{σ_s'} &= \frac{ϑ_s}{σ_s} \\ \Rightarrow s_i' &= \frac{σ_s'}{σ_s} ϑ_s + s_i \\ \text{with } s_i &= \frac{σ_s'}{σ_s} \\ s_i' &= s_i + s_i ϑ_s \\ \Rightarrow s_i' &= s_i (1 + ϑ_s) \\ \end{align*}where we made use of the fact that the two standard deviations are related by the signal \(s_i\) (which can be seen by defining \(ϑ_s\) as the normalized difference \(ϑ_s = \frac{s'_i - s_i}{s_i}\)).

This results in the following final (single nuisance parameter) likelihood \(\mathcal{L}'\):

\[ \mathcal{L}' = e^{-s'_\text{tot}} \prod_i \left(1 + \frac{s_i'}{b_i}\right) · \exp\left[-\frac{1}{2} \left(\frac{ϑ_s}{σ_s}\right)² \right] \]

where \(s_i' = s_i (1 + ϑ_s)\) and similarly \(s_{\text{tot}}' = s_{\text{tot}} ( 1 + ϑ_s )\) (the latter just follows because \(1 + ϑ_s\) is a constant under the different channels \(i\)).

The same approach is used to encode the background systematic uncertainty. The position uncertainty is generally handled the same, but the \(x\) and \(y\) coordinates are treated separately.

As shown in eq. \eqref{eq:limit_method_signal_si} the signal \(s_i\) actually depends on the positions \((x_i, y_i)\) of each candidate via the raytracing image \(r\). With this we can introduce the nuisance parameters by replacing \(r\) by an \(r'\) such that \[ r' ↦ r(x_i - x'_i, y_i - y'_i) \] which effectively moves the center position by \((x'_i, y'_i)\). In addition we need to add penalty terms for each of these introduced parameters:

\[ \mathcal{L}' = \exp[-s] \cdot \prod_i \left(1 + \frac{s'_i}{b_i}\right) \cdot \exp\left[-\left(\frac{x_i - x'_i}{\sqrt{2}σ} \right)² \right] \cdot \exp\left[-\left(\frac{y_i - y'_i}{\sqrt{2}σ} \right)² \right] \]

where \(s'_i\) is now the modification from above using \(r'\) instead of \(r\). Now we perform the same substitution as we do for \(ϑ_b\) and \(ϑ_s\) to arrive at:

\[ \mathcal{L}' = \exp[-s] \cdot \prod_i \left(1 + \frac{s'_i}{b_i}\right) \cdot \exp\left[-\left(\frac{ϑ_x}{\sqrt{2}σ_x} \right)² \right] \cdot \exp\left[-\left(\frac{ϑ_y}{\sqrt{2}σ_y} \right)² \right] \]

The substitution for \(r'\) means the following for the parameters: \[ r' = r\left(x (1 + ϑ_x), y (1 + ϑ_y)\right) \] where essentially a deviation of \(|ϑ| = 1\) means we move the center of the axion image to the edge of the chip.

Putting all these four nuisance parameters together we have

\begin{align} \label{eq:limit_method:likelihood_function_def} \mathcal{L}' &= \left(\prod_i \frac{P_{\text{pois}}(n_i; s_i + b_i)}{P_{\text{pois}}(n_i; b_i)}\right) \cdot \mathcal{N}(ϑ_s, σ_s) \cdot \mathcal{N}(ϑ_b, σ_b) \cdot \mathcal{N}(ϑ_x, σ_x) \cdot \mathcal{N}(ϑ_y, σ_y) \\ \mathcal{L}'(g, ϑ_s, ϑ_b, ϑ_x, ϑ_y) &= e^{-s'_\text{tot}} \prod_i \left(1 + \frac{s_i''}{b_i'} \right) · \exp\left[-\frac{1}{2} \left(\frac{ϑ_s}{σ_s}\right)² -\frac{1}{2} \left(\frac{ϑ_b}{σ_b}\right)² -\frac{1}{2} \left(\frac{ϑ_x}{σ_x}\right)² -\frac{1}{2} \left(\frac{ϑ_y}{σ_y}\right)² \right] \end{align}where here the doubly primed \(s_i''\) refers to modification for the signal nuisance parameter as well as for the position uncertainty via \(r'\).

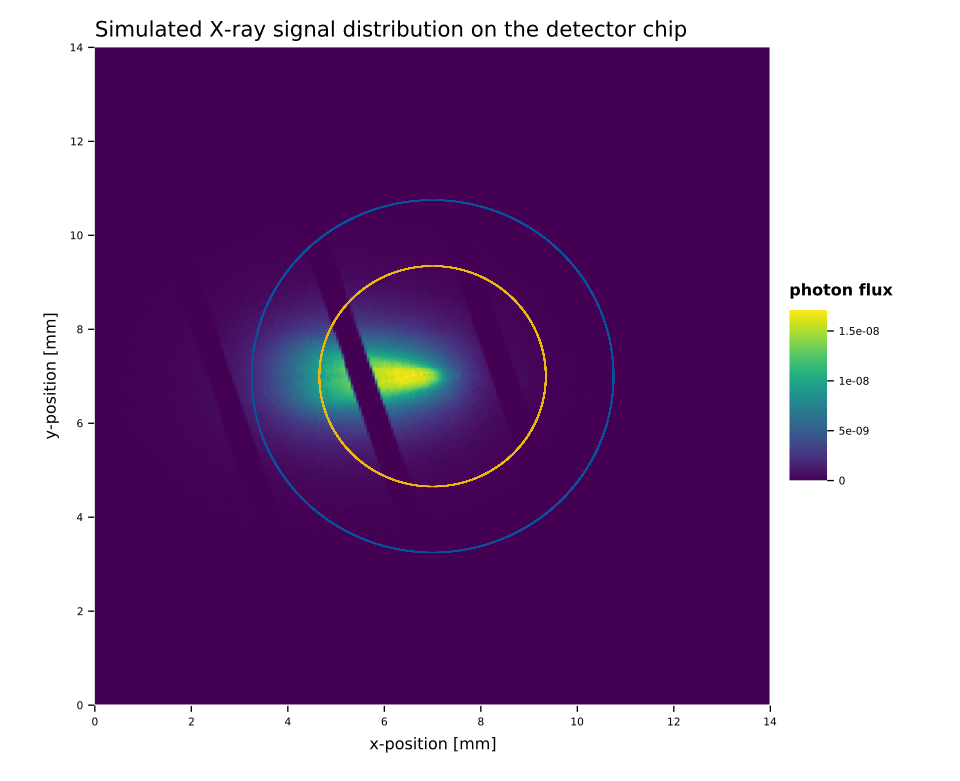

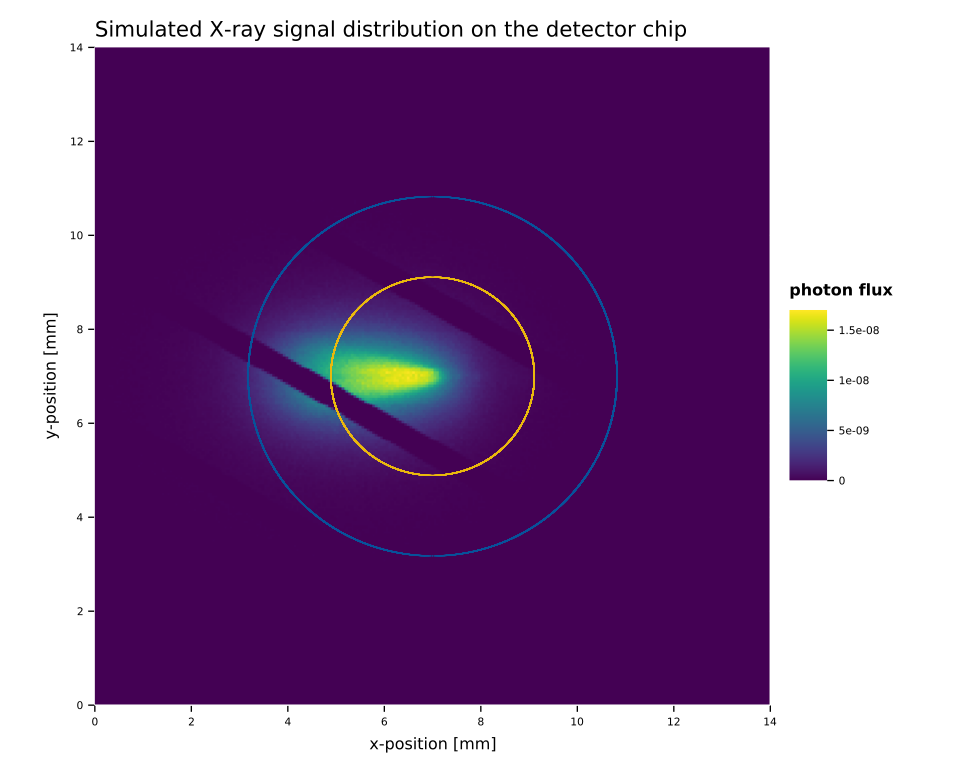

An example of the impact of the nuisance parameters on the likelihood space as well as on the parameters (\(s, b, x, y\)) is shown in fig. 2. First, fig. 2(a) shows how the axion image moves when \(ϑ_{x,y}\) change, in this example \(ϑ_{x,y} = 0.6\) moves the image center to the bottom left (\(ϑ_{x,y} = 1\) would move the center into the corner). Note that the window strongback is not tied to the axion image, but remains fixed (the cut out diagonal lines). Fig. 2(b) and 2(c) show the impact of the nuisance parameters on the likelihood space. The larger the standard deviation \(σ_{x,y}\) is, the more of the \(ϑ_{x,y}\) space contributes meaningfully to \(\mathcal{L}_M\). In the former example – a realistic uncertainty – only small regions around the center are allowed to contribute. Regions further outside receive too large of a penalty. However, at large uncertainties significant regions of the parameter space play an important role. Given that each point on the figures 2(b) and 2(c) describes one axion image like 2(a), brighter regions imply positions where the axion image is moved to parts that provide a larger \(s/b\) in the center portion of the axion image, while still only having a small enough penalty. For the realistic uncertainty, \(σ = 0.05\), roughly the inner \(-0.1 < ϑ < 0.1\) space contributes. This corresponds to a range of \(\SI{-0.7}{mm} < x < \SI{0.7}{mm}\) around the center in fig. 2(a).

13.6.1. Example for systematics extended

[ ]THINK ABOUT IF THIS IN THESIS!

For example assuming we had these systematics (expressed as relative numbers from the best guess):

- signal uncertainties:

- magnet length: \(\SI{0.2}{\%}\)

- magnet bore diameter: \(\SI{2.3}{\%}\)

- window thickness: \(\SI{0.6}{\%}\)

- position uncertainty (of where the axion image is projected):

- detector alignment: \(\SI{5}{\%}\)

- background uncertainty:

- A: \(\SI{0.5}{\%}\) (whatever it may be, all real ones of mine are very specific)

From here we compute 3 combined systematics:

- \(σ_s = \sqrt{ 0.2² + 2.3² + 0.6²} = \SI{2.38}{\%}\)

- \(σ_p = \SI{5}{\%}\)

- \(σ_b = \SI{0.5}{\%}\)

13.6.2. Generate plots of systematic extended

The left most image in fig. 2

is created as part of the --raytracing sanity check. The other two

are part of the likelihoodSystematics sanity check (from the

plotLikelihoodCurves proc via calcPosition for either the "few" or

"many" candidates case.

We place these into a separate directory, because for this particular

set of plots we wish to produce them with a target width of

0.3333\textwidth.

F_WIDTH=0.33333333333 DEBUG_TEX=true ESCAPE_LATEX=true USE_TEX=true \

mcmc_limit_calculation sanity \

--limitKind lkMCMC \

--axionModel ~/org/resources/axionProduction/axionElectronRealDistance/solar_axion_flux_differential_g_ae_1e-13_g_ag_1e-12_g_aN_1e-15_0.989AU.csv \

--axionImage ~/phd/resources/axionImages/solar_axion_image_fkAxionElectronPhoton_0.989AU_1492.93mm.csv \

--combinedEfficiencyFile ~/org/resources/combined_detector_efficiencies.csv \

--switchAxes \

--sanityPath ~/phd/Figs/limit/sanity/fWidth0.3/ \

--likelihoodSystematics \

--raytracing \

--rombergIntegrationDepth 3

13.6.3. \(s'\) is equivalent to \(s_i'\) ? extended

so indeed, this is perfectly valid.

13.7. Limit method - evaluating \(\mathcal{L}\) with nuisance parameters

The likelihood function we started with \(\mathcal{L}\) was only a function of the coupling constant \(g\) we want to compute a limit for. With the inclusion of the four nuisance parameters however, \(\mathcal{L}'\) is now a function of 5 parameters, \(\mathcal{L}'(g, ϑ_s, ϑ_b, ϑ_x, ϑ_y)\). Following our definition of a limit via a fixed percentile of the integral over the coupling constant, eq. \eqref{eq:limit_method:limit_def}, leads to a problem for \(\mathcal{L}'\). If anything, one could define a contour describing the 95-th percentile of the "integral volume", but this would lead to infinitely many values of \(g\) that describe said contour.

As a result, to still define a sane limit value, the concept of the marginal likelihood function \(\mathcal{L}'_M\) is introduced. The idea is to integrate out the nuisance parameters

\begin{equation} \label{eq:limit:method_mcmc:L_integral} \mathcal{L}'_M(g) = \iiiint_{-∞}^∞ \mathcal{L}'(g, ϑ_s, ϑ_b,ϑ_x,ϑ_y)\, \dd ϑ_s \dd ϑ_b \dd ϑ_x \dd ϑ_y. \end{equation}Depending on the exact definition of \(\mathcal{L}'\) in use, these integrals may be analytically computable. In many cases however they are not and numerical techniques to evaluate the integral must be utilized.

Aside from the technical aspects about how to evaluate \(\mathcal{L}'_M(g)\) at a specific \(g\), the limit calculation continues exactly as for the case without nuisance parameters once \(\mathcal{L}'_M(g)\) is defined as such.

- Practical calculation of \(\mathcal{L}'_M(g)\) in our case

In case of our explicit likelihood function eq. \eqref{eq:limit_method:likelihood_function_def} there is already one particular case that makes the marginal likelihood not analytically integrable because the \(b_i' = b_i(1 + ϑ_b)\) term introduces a singularity for \(ϑ_b = -1\). For practical purposes this is not too relevant, as values approaching \(ϑ_b = -1\) would imply having zero background and within a reasonable systematic uncertainty the penalty term makes contributions in this limit so small such that this area does not physically contribute to the integral.

Using standard numerical integration routines (simpson, adaptive Gauss-Kronrod etc.) are all too expensive to compute such a four-fold integration under the context of computing many toy limits for an expected limit. For this reason Monte Carlo approaches are used, in particular the Metropolis-Hastings (Metropolis et al. 1953; Hastings 1970) (MH) Markov Chain Monte Carlo (MCMC). The basic idea of general Monte Carlo integration routines is to evaluate the function at random points and computing the integral based on the function evaluation at these points (by scaling the evaluations correctly). Unless the function is very 'spiky' in the integration space, Monte Carlo approaches provide good accuracy at a fraction of the computational effort as normal numerical algorithms even in higher dimensions. However, we can do better than relying on fully random points in the integration space. The Metropolis-Hastings algorithm tries to evaluate the function more often in those points where the contributions are large. The basic idea is the following:

Pick a random point in the integration space as a starting point \(p_0\). Next, pick another random point \(p_1\) within the vicinity of \(p_0\). If the function \(f\) evaluates to a larger value at \(p_1\), accept it as the new current position. If it is smaller, accept it with a probability of \(\frac{f(p_i)}{f(p_{i-1})}\) (i.e. if the new value is close to the old one we accept it with a high probability and if the new one is much lower accept it rarely). This guarantees to pick values inching closer to the most contributing areas of the integral in the integration space, while still allowing to get out of local maxima due to the random acceptance of "worse" positions. However, this also implies that regions of constant \(\mathcal{L}\) (regions where the values are close to 0, but also generally 'flat' regions) produce a pure random walk from the algorithm, because \(\frac{f(p_i)}{f(p_{i-1})} \approx 1\) in those regions. This needs to be taken into account.

If a new point is accepted and becomes the current position, the "chain" of points is extended (hence "Markov Chain"). If a point is rejected, extend the chain by duplicating the last point. By creating a chain of reasonable length, the integration space is sampled well. Because the initial point is completely random (up to some possible prior) the first \(N\) links of the chain are in a region of low interest (and depending on the interpretation of the chain "wrong"). For that reason one defines a cutoff \(N_b\) of the first elements that are thrown away as "burn-in" before using the chain to evaluate the integral or parameters.

In addition it can be valuable to not only start a single Markov Chain from one random point, but instead start multiple chains from different points in the integration space. This increases the chance to cover different regions of interest even in the presence of multiple peaks separated too far away to likely "jump over" via the probabilistic acceptance. As such it reduces bias from the starting sampling.

To summarize the algorithm:

- let \(\vec{p}\) be a random vector in the integration space and \(f(\vec{p})\) the function to evaluate,

- pick new point \(\vec{p}'\) in vicinity of \(\vec{p}\),

- sample from random uniform in \([0, 1]\): \(u\),

- accept \(\vec{p}'\) if \(u < \frac{f(\vec{p}')}{f(\vec{p})}\), add \(\vec{p}'\) to chain and iterate (if \(f(\vec{p}') > f(\vec{p})\) every new link accepted!). If rejected, add \(\vec{p}\) again,

- generate a long enough chain to sample the integration space well,

- throw away first N elements as "burn in",

- generate multiple chains to be less dependent on starting position.

Applied to eq. \eqref{eq:limit:method_mcmc:L_integral}, we obtain \(\mathcal{L}_M(g)\) by computing the histogram of all sampled \(g\) values, which are one component of the vector \(\vec{p}\). More on that in sec. 13.12.

13.8. Note about likelihood integral extended

The likelihood is a product of probability density functions. However, it is important to note that the likelihood is a function of the parameter and not the data. As such integrating over all parameters does not necessarily equate to 1!

13.9. Derivation of short form of \(\mathcal{L}\) [/] extended

[ ]WRITE THE NON LOG FORM

This uses the logarithm form, but the non log form is even easier actually.

\begin{align*} \ln \mathcal{\mathcal{L}} &= \ln \prod_i \frac{ \frac{(s_i + b_i)^{n_i}}{n_i!} e^{-(s_i + b_i)} }{ \frac{b_i^{n_i}}{n_i!} e^{-b_i} } \\ &= \sum_i \ln \frac{ \frac{(s_i + b_i)^{n_i}}{n_i!} e^{-(s_i + b_i)} }{ \frac{b_i^{n_i}}{n_i!} e^{-b_i} } \\ &= \sum_i \ln \frac{(s_i + b_i)^{n_i}}{n_i!} e^{-(s_i + b_i)} - \ln \frac{b_i^{n_i}}{n_i!} e^{-b_i} \\ &= \sum_i n_i \ln (s_i + b_i) - \ln n_i! - (s_i + b_i) - (n_i \ln b_i - \ln n_i! -b_i) \\ &= \sum_i n_i \ln (s_i + b_i) - (s_i + b_i) - n_i \ln b_i + b_i \\ &= \sum_i n_i \ln (s_i + b_i) - (s_i + b_i - b_i) - n_i \ln b_i \\ &= \sum_i n_i \ln \left(\frac{s_i + b_i}{b_i}\right) - s_i \\ &= -s_{\text{tot}} + \sum_i n_i \ln \left(\frac{s_i + b_i}{b_i} \right) \\ &\text{or alternatively} \\ &= -s_{\text{tot}} + \sum_i n_i \ln \left(1 + \frac{s_i}{b_i} \right) \\ \end{align*}13.10. Likelihood ingredients in detail

To reiterate, the likelihood function we finally evaluate using MCMC, with explicit dependency on the coupling constant we intend to (mainly) consider – the axion-electron coupling \(g_{ae}\) – can be written as

\[ \mathcal{L'}_{M}(g²_{ae}) = \iiiint_{-∞}^∞ e^{-s'_{\text{tot}}(g²_{ae})} · \prod_i \left(1 +\frac{s_i''(g²_{ae})}{b_i'}\right) · \exp\left[ -\frac{ϑ_b²}{2 σ_b²} -\frac{ϑ_s²}{2 σ_s²} -\frac{ϑ_x²}{2σ_x²} -\frac{ϑ_y²}{2 σ_y²} \right] \, \dd ϑ_b \dd ϑ_s \dd ϑ_x \dd ϑ_y, \]

where \(i\) runs over all candidates. We alluded to the general make up of both the signal terms \(s_{\text{tot}}\) and \(s_i\) as well as the background \(b_i\) in sec. 13.3. Let us now look at what goes into each of these explicitly and how they are calculated, starting with each of the signal contributions in

\[ s_i(g²_{ae}) = f(g²_{ae}, E_i) · A · t · P_{a \rightarrow γ}(g²_{aγ}) · ε(E_i) · r(x_i, y_i), \]

sec. 13.10.1 to sec. 13.10.7 and the background in sec. 13.10.8. Finally, sec. 13.10.9 explains how we sample toy candidate sets.

13.10.1. Magnet bore and solar tracking time - \(A\), \(t\)

Starting with the simplest inputs to the signal, the magnet bore area and the solar tracking time. The CAST magnet has a bore diameter of \(d_{\text{bore}} = \SI{43}{mm}\), as introduced in sec. 5.1. The relevant area for the solar axion flux is the entire magnet bore, because the X-ray telescope covers the full area. As such, \(A\) is a constant of:

\[ A = π (\SI{21.5}{mm})² = \SI{1452.2}{mm²}. \]

The time of interest is the total solar tracking duration, in which the detector was sensitive (i.e. removing the dead time due to readout). As given in the CAST data taking overview, sec. 10.6, the amount of active solar tracking time is

\[ t = \SI{160.38}{h}. \]

13.10.2. Solar axion flux - \(f(g, E_i)\)

The solar axion flux is based on the calculations by J. Redondo (Redondo 2013) as already introduced in sec. 4.5. The \(f(g², E_i)\) term of the signal refers to the differential solar axion flux. The flux, fig. 3(a), is computed for a specific axion model and coupling constant, in this case \(g_{\text{ref}} = g_{ae} = \num{1e-13}\) and \(g_{aγ} = \SI{1e-12}{GeV^{-1}}\). As the flux scales by the coupling constant squared, it is rescaled to a new coupling constant \(g²_{ae}\) by

\[ f(g²_{ae}, E_i) = f(g²_{ae, \text{ref}}, E_i) · \frac{g²_{ae}}{g²_{\text{ref}, ae}}. \]

\(g_{aγ}\) is kept constant. At this ratio of the two coupling constants, the axion-photon flux is negligible.

The shown differential flux is computed using a Sun to Earth distance of \(d_{S⇔E} = \SI{0.989}{AU}\) due to the times of the year in which solar trackings were taken at CAST. Fig. 3(b) shows the distance between Sun and Earth during the entire data taking period, with the solar trackings marked in green. The data for the distance is obtained using the JPL Horizons API (“JLP Horizons” 2023).

The code used to calculate the differential flux, (Von Oy 2023) 6, can also be used to compute the flux for other axion models, for example a pure axion-photon coupling model.

13.10.2.1. Generate solar axion flux plot and distance Sun-Earth extended

- Use Horizons API to download data for distance during CAST data taking

See ./../org/journal.html -> notes about writing the below code. See ./../org/journal.html -> notes on development of the

horizonsapiNim library that we use below.First we download the distance between the Sun and Earth during the data taking campaign at CAST (between Jan 2017 and Dec 2019; we could be more strict, but well).

This is done using https://github.com/SciNim/horizonsAPI, a simple library to interface with JPL's Horizons API. An API that allows to access all sorts of data about the solar system.

import horizonsapi, datamancer, times let startDate = initDateTime( 01, mJan, 2017, 00, 00, 00, 00, local() ) let stopDate = initDateTime( 31, mDec, 2019, 23, 59, 59, 00, local() ) let nMins = (stopDate - startDate).inMinutes() const blockSize = 85_000 # max line number somewhere above 90k. Do less to have some buffer let numBlocks = ceil(nMins.float / blockSize.float ).int # we end up at a later date than ` stopDate `, but that's fine echo numBlocks let blockDur = initDuration(minutes = blockSize) let comOpt = { # coFormat : "json", # data returned as "fake" JSON coMakeEphem : "YES", coCommand : "10", # our target is the Sun, index 10 coEphemType : "OBSERVER" }.toTable # observational parameters var ephOpt = { eoCenter : "coord@399", # observational point is a coordinate on Earth (Earth idx 399) eoStartTime : startDate.format( "yyyy-MM-dd" ), eoStopTime : (startDate + blockDur).format( "yyyy-MM-dd" ), eoStepSize : "1 MIN", # in 1 min steps eoCoordType : "GEODETIC", eoSiteCoord : "+6.06670,+46.23330,0", # Geneva eoCSVFormat : "YES" }.toTable # data as CSV within the JSON (yes, really) var q: Quantities q.incl 20 ## Observer range! In this case range between our coordinates on Earth and target var reqs = newSeq [ HorizonsRequest ]() for i in 0 ..< numBlocks: # modify the start and end dates ephOpt[eoStartTime] = (startDate + i * blockDur).format( "yyyy-MM-dd" ) ephOpt[eoStopTime] = (startDate + (i+1 ) * blockDur).format( "yyyy-MM-dd" ) echo "From : ", ephOpt[eoStartTime], " to ", ephOpt[eoStopTime] reqs.add initHorizonsRequest(comOpt, ephOpt, q) let res = getResponsesSync(reqs) proc convertToDf (res: seq [ HorizonsResponse ] ): DataFrame = result = newDataFrame() for r in res: result.add parseCsvString(r.csvData) let df = res.convertToDf().unique( "Date__(UT)__HR:MN" ) .select( [ "Date__(UT)__HR:MN", "delta", "deldot" ] ) echo df df.writeCsv( "/home/basti/phd/resources/sun_earth_distance_cast_datataking.csv", precision = 16 ) - Generate plot of distance with CAST trackings marked

See again ./../org/journal.html

With the CSV file produced in the previous section we can now plot the CAST trackings (from the TimepixAnalysis

resourcesdirectory) against it.Note: We need to use the same plot height as for the differential axion flux produced in sec. 4.5.2. Height not defined, width 600 (golden ratio).

import ggplotnim, sequtils, times, strutils, strformat # 2017-Jan-01 00:00 const Format = "yyyy-MMM-dd HH:mm" const OrgFormat = "'<'yyyy-MM-dd ddd H:mm'>'" const p2017 = "~/CastData/ExternCode/TimepixAnalysis/resources/DataRuns2017_Reco_tracking_times.csv" const p2018 = "~/CastData/ExternCode/TimepixAnalysis/resources/DataRuns2018_Reco_tracking_times.csv" var df = readCsv( "~/phd/resources/sun_earth_distance_cast_datataking.csv" ) .mutate(f{ string -> int: "Timestamp" ~ parseTime(idx( "Date__(UT)__HR:MN" ).strip, Format, local() ).toUnix.int } ) proc readRuns (f: string ): DataFrame = result = readCsv(f) .mutate(f{ string -> int: "TimestampStart" ~ parseTime(idx( "Tracking start" ), OrgFormat, local() ).toUnix.int } ) .mutate(f{ string -> int: "TimestampStop" ~ parseTime(idx( "Tracking stop" ), OrgFormat, local() ).toUnix.int } ) var dfR = readRuns(p2017) dfR.add readRuns(p2018) var dfHT = newDataFrame() for tracking in dfR: let start = tracking[ "TimestampStart" ].toInt let stop = tracking[ "TimestampStop" ].toInt dfHT.add df.filter(f{ int: `Timestamp` >= start and `Timestamp` <= stop} ) dfHT[ "Type" ] = "Trackings" df[ "Type" ] = "HorizonsAPI" df.add dfHT let deltas = dfHT[ "delta", float ] let meanD = deltas.mean let varD = deltas.variance let stdD = deltas.std echo "Mean distance during trackings = ", meanD echo "Variance of distance during trackings = ", varD echo "Std of distance during trackings = ", stdD # and write back the DF of the tracking positions # dfHT.writeCsv("~/phd/resources/sun_earth_distance_cast_solar_trackings.csv") let texts = @[r"$μ_{\text{distance}} = " & &"{meanD:.4f}$", # r"$\text{Variance} = " & &"{varD:.4g}$", r"$σ_{\text{distance}} = " & &"{stdD:.4f}$" ] let annot = texts.join(r"\\" ) echo "Annot: ", annot proc thm (): Theme = result = sideBySide() result.annotationFont = some(font( 7.0 ) ) # we don't want monospace font! ggplot(df, aes( "Timestamp", "delta", color = "Type" ) ) + geom_line(data = df.filter(f{`Type` == "HorizonsAPI" } ) ) + geom_point(data = df.filter(f{`Type` == "Trackings" } ), size = 1.0 ) + scale_x_date(isTimestamp = true, formatString = "yyyy-MM", dateSpacing = initDuration(days = 90 ) ) + xlab( "Date", rotate = -45.0, alignTo = "right", margin = 3.0 ) + annotate(text = annot, x = 1.5975e9, y = 1.0075 ) + ggtitle( "Distance in AU Sun ⇔ Earth" ) + legendPosition( 0.7, 0.2 ) + themeLatex(fWidth = 0.5, width = 600, baseTheme = thm, useTeX = true ) + margin(left = 3.5, bottom = 3.75 ) + ggsave( "~/phd/Figs/systematics/sun_earth_distance_cast_solar_tracking.pdf", width = 600, height = 360, dataAsBitmap = true )

13.10.3. Conversion probability - \(P_{aγ}(g²_{aγ})\)

The conversion probability of the arriving axions is simply a constant factor, depending on \(g_{aγ}\), see section 4.4 for the derivation from the general formula. The simplified expression for coherent conversion 7 in a constant magnetic field 8 is

\[ P(g²_{aγ}, B, L) = \left(\frac{g_{aγ} \cdot B \cdot L}{2}\right)^2 \] where the relevant numbers for the CAST magnet are:

\begin{align*} B &= \SI{8.8}{T} &↦ B_{\text{natural}} &= \SI{1719.1}{eV^2} \\ L &= \SI{9.26}{m} &↦ L_{\text{natural}} &= \SI{4.69272e7}{eV^{-1}}. \end{align*}The magnetic field is taken from the CAST slow control log files and matches the values used in the paper of CAST CAPP (Adair et al. 2022) (in contrast to some older papers which assumed \(\SI{9}{T}\), based on when the magnet was still intended to be run at above \(\SI{13000}{A}\)).

Assuming a fixed axion-photon coupling of \(g_{aγ} = \SI{1e-12}{GeV^{-1}}\) the conversion probability comes out to:

\begin{align*} P(g²_{aγ}, B, L) &= \left(\frac{g_{aγ} \cdot B \cdot L}{2}\right)^2 \\ &= \left(\frac{\SI{1e-12}{GeV^{-1}} \cdot \SI{1719.1}{eV^2} \cdot \SI{4.693e7}{eV^{-1}}}{2}\right)^2 \\ &= \num{1.627e-21} \end{align*}13.10.3.1. Computing conversion factors and comparing natural to SI eq. extended

The conversion factors from Tesla and meter to natural units are as follows:

import unchained

echo "Conversion factor Tesla: ", 1.T.toNaturalUnit()

echo "Conversion factor Meter: ", 1.m.toNaturalUnit()

Conversion factor Tesla: 195.353 ElectronVolt² Conversion factor Meter: 5.06773e+06 ElectronVolt⁻¹

TODO: Move this out of the thesis and just show the numbers in text? Keep the "derivation / computation" for the "full" version (:noexport: ?).

As such, the resulting conversion probability ends up as:

import unchained, math

echo "8.8 T = ", 8.8.T.toNaturalUnit()

echo "9.26 m = ", 9.26.m.toNaturalUnit()

echo "P = ", pow( 1e-12.GeV⁻¹ * 8.8.T.toNaturalUnit() * 9.26.m.toNaturalUnit() / 2.0, 2.0 )

8.8 T = 1719.1 eV² 9.26 m = 4.69272e+07 eV⁻¹ P = 1.627022264358953e-21

\begin{align*} P(g_{aγ}, B, L) &= \left(\frac{g_{aγ} \cdot B \cdot L}{2}\right)^2 \\ &= \left(\frac{\SI{1e-12}{\per GeV} \cdot \SI{1719.1}{eV^2} \cdot \SI{4.693e7}{eV}}{2}\right)^2 \\ &= \num{1.627e-21} \end{align*}Note that this is of the same (inverse) order of magnitude as the flux of solar axions (\(\sim10^{21}\) in some sensible unit of time), meaning the experiment expects \(\mathcal{O}(1)\) counts, which is sensible.

import unchained, math

echo "8.8 T = ", 8.8.T.toNaturalUnit()

echo "9.26 m = ", 9.26.m.toNaturalUnit()

echo "P(natural) = ", pow( 1e-12.GeV⁻¹ * 8.8.T.toNaturalUnit() * 9.26.m.toNaturalUnit() / 2.0, 2.0 )

echo "P(SI) = ", ε0 * (hp / ( 2*π) ) * (c^3 ) * (1e-12.GeV⁻¹ * 8.8.T * 9.26.m / 2.0 )^2

As we can see, both approaches yield the same numbers, meaning the additional conversion factors are correct.

13.10.4. Detection efficiency - \(ε(E_i)\)

The detection efficiency \(ε(E_i)\) includes multiple aspects of the full setup. It can be further decomposed into the telescope efficiency, window transparency, gas absorption, software efficiency of the classifier and veto efficiency,

\[ ε(E_i) = ε_{\text{telescope}}(E_i) · ε_{\text{window}}(E_i) · ε_{\text{gas}}(E_i) · ε_{\text{software eff.}} · ε_{\text{veto eff.}}. \]

The first three are energy dependent and the latter two constants, but dependent on the classifier and veto setup for which we compute limits.

13.10.4.1. Telescope efficiency - \(ε_{\text{telescope}}(E_i)\)

The X-ray telescope further has a direct impact not only on the shape of the axion signal on the readout, but also the total number of X-rays transmitted. The effective transmission of an X-ray telescope is significantly lower than in the optical range. This is typically quoted using the term "effective area". In section 5.1.3 the effective area of the two X-ray optics used at CAST is shown. The term effective area refers to the equivalent area a perfect X-ray telescope (\(\SI{100}{\%}\) transmission) would cover. As such, the real efficiency \(ε_{\text{tel}}\) can be computed by the ratio of the effective area \(A_{\text{eff}}\) and the total area of the optic \(A_{\text{tel}}\) exposed to light.

\[ ε_{\text{tel}}(E) = \frac{A_{\text{eff}}(E)}{A_{\text{tel}}} \]

where the effective area \(A_{\text{eff}}\) depends on the energy. 9 In case of CAST the relevant total area is not actually the cross-sectional area of the optic itself, but rather the exposed area due to the diameter of the magnet coldbore. With a coldbore diameter of \(d_{\text{bore}} = \SI{43}{mm}\) the effective area can be converted to \(ε_{\text{tel}}\).

The resulting effective area is shown in fig. 4 in the next section together with the window transmission and gas absorption.

Note: all publicly available effective areas for the LLNL telescope, meaning (Jakobsen 2015) and (Aznar et al. 2015), are either inapplicable, outdated or unfortunately wrong. Jaime Ruz sent me the simulation results used for the CAST Nature paper (Collaboration and others 2017), which include the effective area. These numbers are used in the figure below and our limit calculation.

- Notes on the effective area extended

Some might say people working with X-ray telescopes prefer the 'effective area' as a measure of efficiency to hide the fact how inefficient X-ray telescopes are, whoops.

Anyway, the effective area of the LLNL telescope is still the biggest mystery to me. If you haven't read the raytracing appendix 37, in particular the section about the LLNL telescope, sec. 37.2, the public information available about the LLNL telescope is either outdated, contradictory or plain wrong.

The PhD thesis of Anders Jakobsen (Jakobsen 2015) contains a plot of the effective area (fig. 4.13 on page 64, 87 of PDF), which peaks near ~10 cm². However, it is unclear what the numbers are actually based on. Likely they describe parallel incoming light. In addition they likely include the initial telescope design of 14 instead of the final 13 shells. Both means the result is an overestimate.

Then, (Aznar et al. 2015), the paper about the telescope at CAST, contains another effective area plot peaking at about 8.2 cm². It is stated the numbers are for an HPD (half power diameter) of 75 arc seconds using a solar axion emission from a 3 arcmin disc size. And yet, apparently these numbers are still an overestimate.

As mentioned in the main text above, I was sent the simulations used for the CAST Nature paper (Collaboration and others 2017) by Jaime Ruz, which contain the axion image and effective area. These numbers peak at only about 7.3 cm²! At the very least this roughly matches the slides from the CAST collaboration meeting on , on slide 36. If one looks at those slides, one might notice that the results on slide 35 for the best model actually peak closer to the aforementioned 8.2 cm². According to Jaime the reason for this is that the higher numbers are based on the full telescope area and the lower numbers only the size of CAST's magnet bore.

This may very well all be true. My personal skepticism is due to two things:

- my general feeling that the numbers are exceptionally low. Essentially the telescope is mostly worse than the ABRIXAS telescope, which just surprises me. But I'm obviously not an X-ray telescope expert.

- more importantly, every attempt of mine to compute the effective area based on the reflectivities of the shells with parallel or realistic solar axion emission yielded numbers quite a bit higher than the data sent to me by Jaime.

One note though: I still need to repeat the effective area calculations for the 'realistic' solar axion emission after fixing a random sampling bug. It may very well affect the result, even though it would surprise me if that explained the difference I saw.

The most likely reason is that simply my simulation is off. Possibly the – mentioned in the slides of the CCM – contamination of hydrcarbons affect the reflectivity so much as to explain the difference.

13.10.4.2. Window transmission and argon gas absorption - \(ε_{\text{window}}(E_i), ε_{\text{gas}}(E_i)\)

The detector entrance window is the next point affecting the possible signal to be detected. The windows, as explained in section 7.9 are made from \(\SI{300}{nm}\) thick silicon nitride with a \(\SI{20}{nm}\) thick aluminium coating. Its transmission is very good down to about \(\SI{1}{keV}\) below which it also starts to degrade rapidly.

While the window also has four \(\SI{500}{μm}\) thick strongbacks which in total occlude about \(\SI{22.2}{\%}\) of the center region, these are not taken into account into the combined detection efficiency. Instead they are handled together with the axion image \(r(x_i, y_i)\) in sec. 13.10.6.

13.10.4.3. Software efficiency and veto efficiency - \(ε_{\text{software eff.}} · ε_{\text{veto eff.}}\)

The software efficiency \(ε_{\text{software eff.}}\) of course depends on the specific setting which is used. Its value will range from somewhere between \SIrange{80}{97}{\%}. The veto efficiencies in principle can also vary significantly depending on the choice of parameters (e.g. whether the 'line veto' uses an eccentricity cutoff or not), but as explained in sec. 12.5.5 the septem and line vetoes are just considered as either yes or no. The FADC veto has also been fixed to a \(1^{\text{st}}\) to \(99^{\text{th}}\) percentile cut on the signal rise time, see sec. 12.5.2.

As such the relevant veto efficiencies are:

\begin{align*} ε_{\text{FADC}} &= \SI{98}{\%} \\ ε_{\text{septem}} &= \SI{83.11}{\%} \\ ε_{\text{line}} &= \SI{85.39}{\%} \\ ε_{\text{septem+line}} &= \SI{78.63}{\%} \end{align*}where the last one corresponds to using both the septem and the line veto at the same time. Considering for example the case of using these vetoes together with a software efficiency of \(\SI{80}{\%}\) we see that the combined efficiency is already only about \(\SI{61.6}{\%}\), which is an extreme loss in sensitivity.

13.10.4.4. Combined detection efficiency - \(ε(E_i)\)

The previous sections cover aspects which affect the detection efficiency of the detector and thus impact the amount of signal available. Combined they yield a detection efficiency as shown in fig. 4. As can be seen, the combined detection efficiency maxes out at about \(\sim\SI{46}{\%}\) around \(\SI{1.5}{keV}\) without taking into account the software and veto efficiencies. If one combines this with using all vetoes at a software efficiency of \(\SI{80}{\%}\), the total detection efficiency of the detector would peak at only \(\SI{28.4}{\%}\) at that energy.

- Generate plot of detection efficiency

[/]extended

NOTE: We also have ./../CastData/ExternCode/TimepixAnalysis/Tools/septemboardDetectionEff/septemboardDetectionEff.nim nowadays for the limit calculation (to produce the CSV file including LLNL effective area).

UPDATE: Updated the code of

septemboardDetectionEffto not include a mention of the 'software eff.' in the title, as that is plain wrong.To produce the CSV file

USE_TEX=true ./septemboardDetectionEff \ --outpath ~/phd/resources/ \ --plotPath ~/phd/Figs/limit/ \ --llnlEff ~/org/resources/llnl_cast_nature_jaime_data/2016_DEC_Final_CAST_XRT/EffectiveArea.txt \ --sep ' 'note the usage of the "correct" effective area file.

[X]Well, do we need the ingredients separately? Not really right? -> No.

We need the effective area (ideally we would compute it! but of course currently we cannot reproduce it :( ).

So just read the extended LLNL file.

[X]Need densities of Aluminium, … -> 2.7 g•cm⁻³[X]Need to update xrayAttenuation to create the plot! -> Done.[X]NEED TO update numericalnim for interpolation![X]NEED TO update seqmath for linspace fixes[X]USE 2016 FINAL EFFECTIVE AREA

13.10.5. Average absorption depth of X-rays

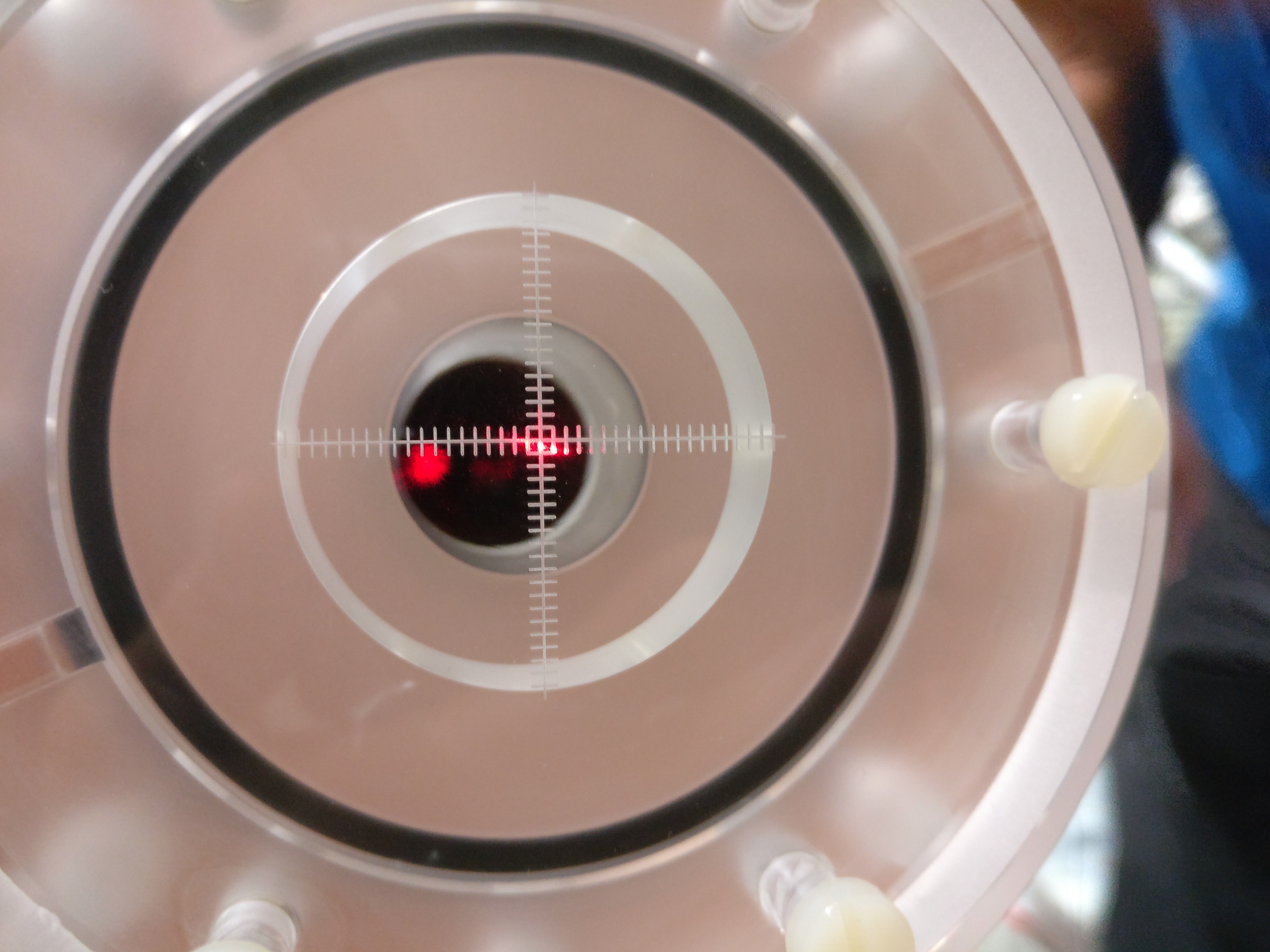

In order to compute a realistic axion image based on raytracing, the plane at which to compute the image needs to be known, as the focal spot size changes significantly depending on the distance to the focal point of the X-ray optics. The beamline behind the telescope is designed such that the focal spot is \(\SI{1}{cm}\) behind the entrance window. 10

This is of particular importance for a gaseous detector, as the raytracing only makes sense up to the generation of a photoelectron, after which the produced primary electrons undergo diffusion. Therefore, one needs to compute the typical absorption depth of X-rays in the relevant energy ranges for the used gas mixture of the detector. This is easiest done based on a Monte Carlo simulation taking into account the incoming X-ray flux distribution (given the solar axion flux we consider) \(f(E)\), the telescope effective area \(ε_{\text{LLNL}}(E)\) and window transmission, \(ε_{\ce{Si3 N4}}(E), ε_{\ce{Al}}(E)\),

\[ I(E) = f(E) · ε_{\text{LLNL}}(E) · ε_{\ce{Si3 N4}}(E) · ε_{\ce{Al}}(E). \]

\(I(E)\) yields the correct energy distribution of expected signal X-rays. For each sampled X-ray we can then draw a conversion point based on the attenuation length and the Beer-Lambert law for its energy introduced in sec. 6.1.1. Computing the median of all conversion points is then an estimator for the point at which to compute the axion image.

Performing this calculation leads to a median conversion point of \(⟨d⟩ = \SI{0.2928}{cm}\) behind the detector window, with a standard deviation of \(\SI{0.4247}{cm}\) due to a long tail from higher energy X-rays. It may be worthwhile to perform this calculation for distinct energies to then compute different axion images for different energies with each their own effective 'depth' behind the window, however for the time being we do not.

For the calculation of these numbers, see appendix 36.

13.10.6. Raytracing axion image - \(r(x_i, y_i)\)

The axion image is computed based on a raytracing Monte Carlo simulation, using TrAXer (Schmidt 2023), written as part of this thesis. Appendix 37 contains an introduction to raytracing techniques, details about the LLNL telescope, verification of the raytracing results using PANTER measurements of the real telescope and details about the calculation of the axion image.

Fig. 5 shows the image, computed for a Sun-Earth distance of \(\SI{0.989}{AU}\) and a distance of \(\SI{0.2928}{cm}\) behind the detector window. So it is \(\SI{0.7072}{cm}\) in front of the focal point. Hence, the image is very slightly asymmetric along the long axis.

Instead of using the raytracing image to fully characterize the axion flux including efficiency losses, we only use it to define the spatial distribution 11. This means we rescale the full axion flux distribution – before taking the window strongback into account – such that it represents the fractional X-ray flux per square centimeter. That way, when we multiply it with the rest of the expression in the signal calculation eq. \eqref{eq:limit_method_signal_si}, the result is the expected number of counts at the given position and energy per \(\si{cm²}\).

The window strongback is not part of the simulation, because for the position uncertainty, we need to move the axion image without moving the strongback. As such the strongback is added as part of the limit calculation based on the physical position on the chip of a given candidate.

13.10.6.1. Generate the axion image plot with strongback extended

In the raytracing appendix we only compute the axion image without the strongback (even though we support placing the strongback into the simulation).

We could either produce the plot based on plotBinary, part of the

TrAXer repository, after running with the strongback in the

simulation, or alternatively as part of the limit calculation sanity

checks. The latter is the cleaner approach, because it directly shows

us the strongback is added correctly in the code where it matters.

We produce it by running the sanity subcommand of

mcmc_limit_calculation, in particular the raytracing argument.

Note that we don't need any input files, the default ones are fine,

because we don't run any input related sanity checks.

F_WIDTH=0.9 DEBUG_TEX=true ESCAPE_LATEX=true USE_TEX=true \

mcmc_limit_calculation sanity \

--limitKind lkMCMC \

--axionModel ~/org/resources/axionProduction/axionElectronRealDistance/solar_axion_flux_differential_g_ae_1e-13_g_ag_1e-12_g_aN_1e-15_0.989AU.csv \

--axionImage ~/phd/resources/axionImages/solar_axion_image_fkAxionElectronPhoton_0.989AU_1492.93mm.csv \

--combinedEfficiencyFile ~/org/resources/combined_detector_efficiencies.csv \

--switchAxes \

--sanityPath ~/phd/Figs/limit/sanity/ \

--raytracing

13.10.7. Computing the total signal - \(s_{\text{tot}}\)

As mentioned in sec. 13.3 in principle we need to integrate the signal function \(s(E, x, y)\) over the entire chip area and all energies. However, we do not actually need to perform that integration, because we know the efficiency of our telescope and detector setup as well as the amount of flux entering the telescope.

Therefore we compute \(s_{\text{tot}}\) via

\[ s_{\text{tot}}(g²_{ae}) = ∫_0^{E_{\text{max}}} f(g²_{ae}, E) · A · t · P_{a ↦ γ}(g²_{aγ}) · ε(E) \, \dd E, \]

making use of the fact that the position dependent function \(r(x, y)\) integrates to \(\num{1}\) over the entire axion image. This allows us to precompute the integral and only rescale the result for the current coupling constant \(g²_{ae}\) via

\[ s_{\text{tot}}(g²_{ae}) = s_{\text{tot}}(g²_{ae,\text{ref}}) · \frac{g²_{ae}}{g²_{ae, \text{ref}}}, \]

where \(g²_{ae, \text{ref}}\) is the reference coupling constant for which the integral is computed initially. Similar rescaling needs to be done for the axion-photon coupling or chameleon coupling, when computing a limit for either.

13.10.7.1. Code for the calculation of the total signal extended

This is the implementation of the totalSignal in code. We simply

circumvent the integration when calculating limits by precomputing the

integral in the initialization (into integralBase), taking into

account the detection efficiency. From there it is just a

multiplication of magnet bore, tracking time and conversion probability.

proc totalSignal (ctx: Context ): UnitLess =

## Computes the total signal expected in the detector, by integrating the

## axion flux arriving over the total magnet bore, total tracking time.

##

## The ` integralBase ` is the integral over the axion flux multiplied by the detection

## efficiency (window, gas and telescope).

const areaBore = π * ( 2.15 * 2.15 ).cm²

let integral = ctx.integralBase.rescale(ctx)

result = integral.cm⁻²•s⁻¹ * areaBore * ctx.totalTrackingTime.to(s) * conversionProbability(ctx)

13.10.8. Background

The background must be evaluated at the position and energy of each cluster candidate. As the background is not constant in energy or position on the chip (see sec. 12.6), we need a continuous description in those dimensions of the background rate.

In order to obtain such a thing, we start from all X-ray like clusters remaining after background rejection, see for example fig. #fig:background:cluster_center_comparison, and construct a background interpolation. We define \(b_i\) as a function of candidate position \(x_i, y_i\) and energy \(E_i\),

\[ b_i(x_i, y_i, E_i) = \frac{I(x_i, y_i, E_i)}{W(x_i, y_i, E_i)}. \]

where \(I\) is an intensity defined over clusters within a range \(R\) and a normalization weight \(W\). From here on we will drop the candidate suffix \(i\). The arguments will be combined to vectors

\[ \mathbf{x} = \vektor{ \vec{x} \\ E } = \vektor{ x \\ y \\ E }. \]

The intensity \(I\) is given by

\[ I(\mathbf{x}) = \sum_{b ∈ \{ \mathcal{D}(\mathbf{x}_b, \mathbf{x}) \leq R \}}\mathcal{M}(\mathbf{x}_b, \mathbf{x}) = \sum_{b ∈ \{ \mathcal{D}(\mathbf{x}_b, \mathbf{x}) \leq R \} } \exp \left[ -\frac{1}{2} \mathcal{D}² / σ² \right], \]

where we introduce \(\mathcal{M}\) to refer to a normal distribution-like measure and \(\mathcal{D}\) to a custom metric (for clarity without arguments). All background clusters \(\mathbf{x}_b\) within some 'radius' \(R\) contribute to the intensity \(I\), weighted by their distance to the point of interest \(\mathbf{x}\). The metric is given by

\begin{equation*} \mathcal{D}( \mathbf{x}_1, \mathbf{x}_2) = \mathcal{D}( (\vec{x}_1, E_1), (\vec{x}_2, E_2)) = \begin{cases} (\vec{x}_1 - \vec{x}_2)² \text{ if } |E_1 - E_2| \leq R \\ ∞ \text{ if } (\vec{x}_1 - \vec{x}_2)² > R² \\ ∞ \text{ if } |E_1 - E_2| > R \end{cases} \end{equation*}with \(\vec{x} = \vektor{x \\ y}\). Note first of all that this effectively describes a cylinder. Any point inside \(| \vec{x}_1 - \vec{x}_2 | \leq R\) simply yields a euclidean distance, as long as their energy is smaller than \(R\). Further note, that the distance is only dependent on the distance in the x-y plane, not their energy difference. Finally, this requires rescaling the energy as a common number \(R\), but in practice the implementation of this custom metric simply compares energies directly, with the 'height' in energy of the cylinder expressed as \(ΔE\).

The commonly used value for the radius \(R\) in the x-y plane are \(R = \SI{40}{pixel}\) and in energy \(ΔE = ± \SI{0.3}{keV}\). The standard deviation of the normal distribution for the weighting in the measure \(σ\) is set to \(\frac{R}{3}\). The basic idea of the measure is simply to provide the highest weight to those clusters close to the point we evaluate and approach 0 at the edge of \(R\) to avoid discontinuities in the resulting interpolation.

Finally, the normalization weight \(W\) is required to convert the sum of \(I\) into a background rate. It is the 'volume' of our measure within the boundaries set by our metric \(\mathcal{D}\):

\begin{align*} W(x', y', E') &= t_B ∫_{E' - E_c}^{E' + E_c} ∫_{\mathcal{D}(\vec{x'}, \vec{x}) \leq R} \mathcal{M}(x', y') \, \dd x\, \dd y\, \dd E \\ &= t_B ∫_{E' - E_c}^{E' + E_c} ∫_{\mathcal{D}(\vec{x'}, \vec{x}) \leq R} \exp\left[ -\frac{1}{2} \mathcal{D}² / σ² \right] \, \dd x \, \dd y \, \dd E \\ &= t_B ∫_{E' - E_c}^{E' + E_c} ∫_0^R ∫_0^{2π} r \exp\left[ -\frac{1}{2} \frac{\mathcal{D}² }{σ²} \right] \, \dd r\, \dd φ\, \dd E \\ &= t_B ∫_{E' - E_c}^{E' + E_c} -2 π \left( σ² \exp\left[ -\frac{1}{2} \frac{R²}{σ^2} \right] - σ² \right) \, \dd E \\ &= -4 π t_B E_c \left( σ² \exp\left[ -\frac{1}{2} \frac{R²}{σ^2} \right] - σ² \right), \\ \end{align*}where we made use of the fact that within the region of interest \(\mathcal{D}'\) is effectively just a radius \(r\) around the point we evaluate. \(t_B\) is the total active background data taking time. If our measure was \(\mathcal{M} = 1\), meaning we would count the clusters in \(\mathcal{D}(\vec{x}, \vec{x}') \leq R\), the normalization \(W\) would simply be the volume of the cylinder.

This yields a smooth and continuous interpolation of the background over the entire chip. However, towards the edges of the chip it underestimates the background rate, because once part of the cylinder is not contained within the chip, fewer clusters contribute. For that reason we correct for the chip edges by upscaling the value within the chip by the missing area. See appendix 32.

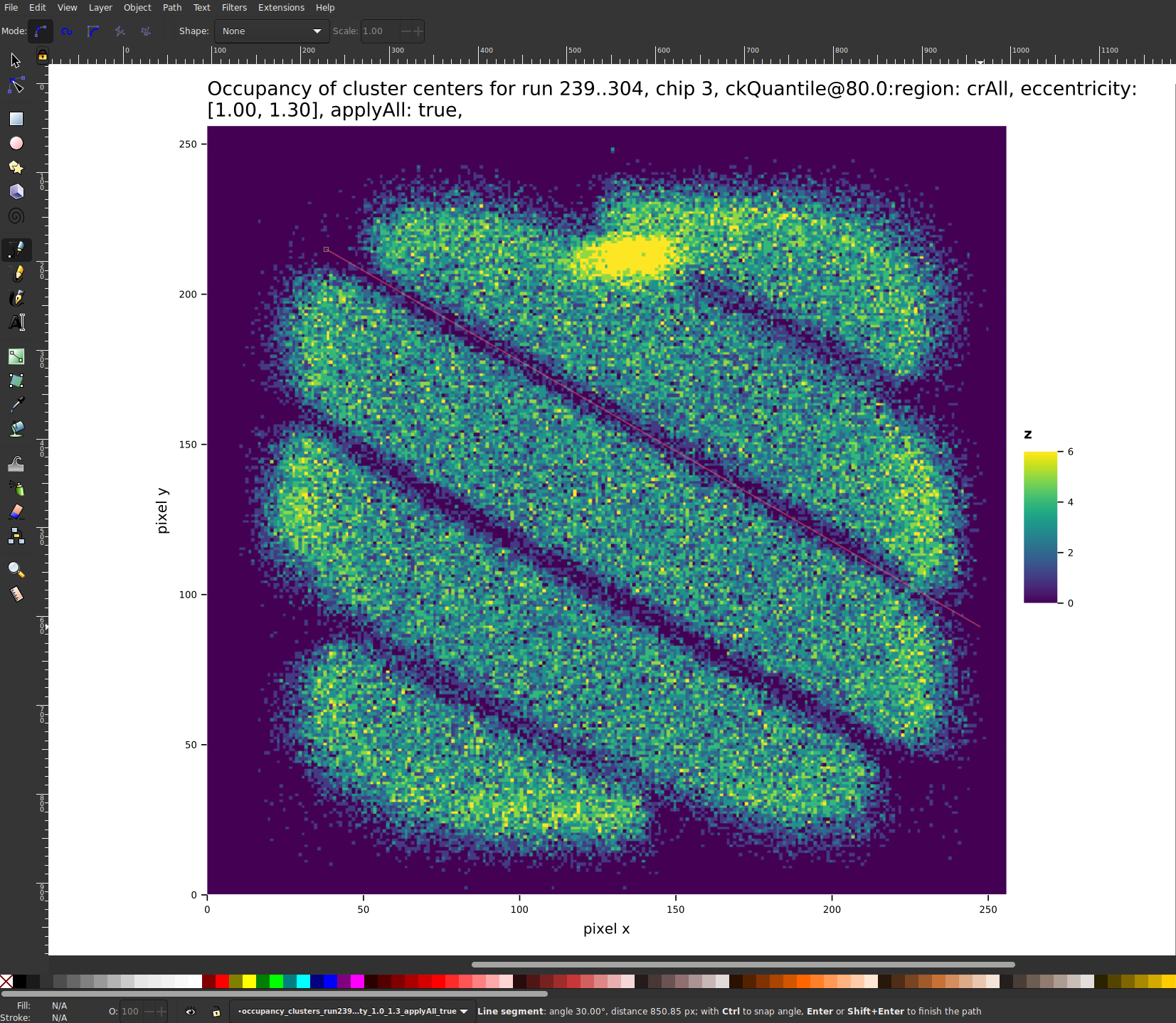

Fig. 6 shows an example of the background interpolation centered at \(\SI{3}{keV}\), with all clusters within a radius of \(\num{40}\) pixels and in an energy range from \(\SIrange{2.7}{3.3}{keV}\). Fig. 6(a) shows the initial step of the interpolation, with all colored points inside the circle being clusters that are contained in \(\mathcal{D} \leq R\). Their color represents the weight based on the measure \(\mathcal{M}\). After normalization and calculation for each point on the chip, we get the interpolation shown in fig. 6(b).

Implementation wise, as the lookup of the closest neighbors in general is an \(N²\) operation for \(N\) clusters, all clusters are stored in a \(k\text{-d}\) tree, for fast querying of clusters close to the point to be evaluated. Furthermore, because the likelihood \(\mathcal{L}\) is evaluated many times for a given set of candidates to compute a limit, we perform caching of the background interpolation values for each candidate. That way we only compute the interpolation once for each candidate.

13.10.8.1. Generate the interpolation figure extended